How we built an audio analysis pipeline that measures confidence, clarity, and communication (and the consistency problems we had to solve along the way)

The Real Reason Candidates Fail Interviews

Most candidates don’t fail interviews because they lack knowledge.

They fail because they hesitate when they shouldn’t. They speak too fast when nervous. They use filler words excessively. They don’t listen to the actual question being asked. They sound uncertain even when they know the answer.

These are soft skills — confidence, clarity, communication, listening, professional demeanor. They matter enormously in hiring decisions, but they’re notoriously difficult to measure. Even two human interviewers rarely agree on the same soft skill scores for the same candidate.

For AI, this is even harder. Text-only LLMs analyzing transcripts can tell you what someone said, but not how they said it. Tone, hesitation, pace, emotional control: these live in the audio, not the text.

This is how we approached the problem.

Why Transcripts Alone Aren’t Enough

Our first attempt was straightforward: take the interview transcript, feed it to an LLM, ask for soft skill scores.

It didn’t work well.

The model could identify filler words in text (“um”, “like”, “basically”), but it couldn’t distinguish between a confident pause and a hesitant one. It couldn’t detect when someone’s voice wavered. It couldn’t tell if the candidate was speaking too fast because they were nervous or because they were excited about the topic.

A transcript of “I led the project and delivered it on time” looks identical whether spoken with conviction or mumbled uncertainly.

We needed to analyze the actual audio.

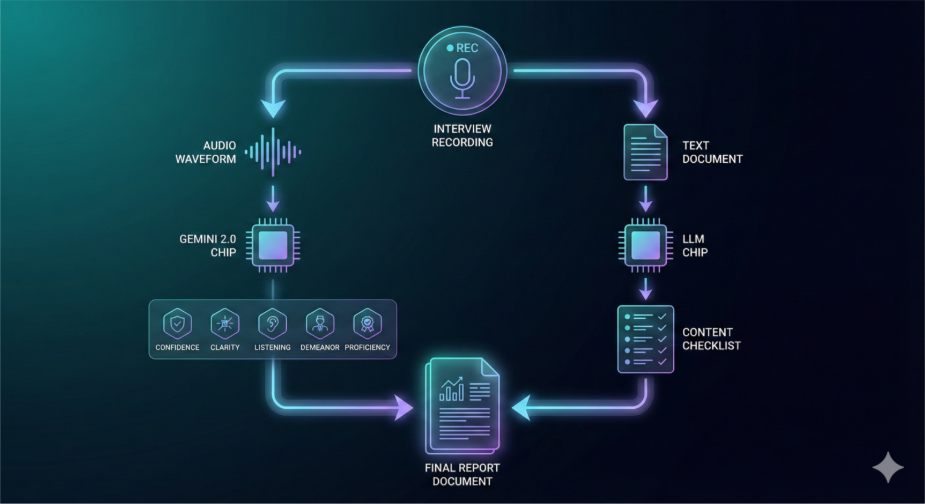

The Two-Pipeline Architecture

As part of our enterprise AI implementation, our evaluation system runs two parallel pipelines:

Pipeline 1: Audio Analysis → Soft Skills

Raw audio goes directly to Gemini 2.0 Flash as multimodal input. The model analyzes vocal characteristics — tone, pace, confidence markers, hesitation patterns, clarity of speech — and outputs scores for five core parameters.

Pipeline 2: Transcript Analysis → Content Evaluation

The conversation transcript goes to a separate LLM pass that evaluates the actual content: whether answers are correct, how well they're structured (using frameworks like STAR for behavioral questions), technical accuracy, and problem-solving approach.

The two pipelines run in parallel, and results are combined into a comprehensive evaluation report.
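The post doesn't show how the two pipelines are orchestrated. A minimal sketch with `asyncio`, where `analyze_audio` and `analyze_transcript` are hypothetical placeholders for the Gemini audio call and the transcript LLM pass, and the score keys are illustrative:

```python
import asyncio
from dataclasses import dataclass


@dataclass
class EvaluationReport:
    soft_skills: dict[str, int]  # Pipeline 1: audio -> soft skill scores
    content: dict[str, int]      # Pipeline 2: transcript -> content scores


async def analyze_audio(audio_path: str) -> dict[str, int]:
    # Hypothetical placeholder for the multimodal Gemini call on the raw audio.
    await asyncio.sleep(0)
    return {"confidence": 3, "clarity": 4}


async def analyze_transcript(transcript: str) -> dict[str, int]:
    # Hypothetical placeholder for the separate LLM pass over the transcript.
    await asyncio.sleep(0)
    return {"technical_accuracy": 4, "structure": 3}


async def evaluate_interview(audio_path: str, transcript: str) -> EvaluationReport:
    # The pipelines don't depend on each other, so run them concurrently
    # and combine the results once both have finished.
    soft_skills, content = await asyncio.gather(
        analyze_audio(audio_path),
        analyze_transcript(transcript),
    )
    return EvaluationReport(soft_skills=soft_skills, content=content)


if __name__ == "__main__":
    print(asyncio.run(evaluate_interview("interview.wav", "Q: ... A: ...")))
```

If the real model calls go through a blocking SDK, each one could be wrapped with `asyncio.to_thread` so `gather` still overlaps them.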

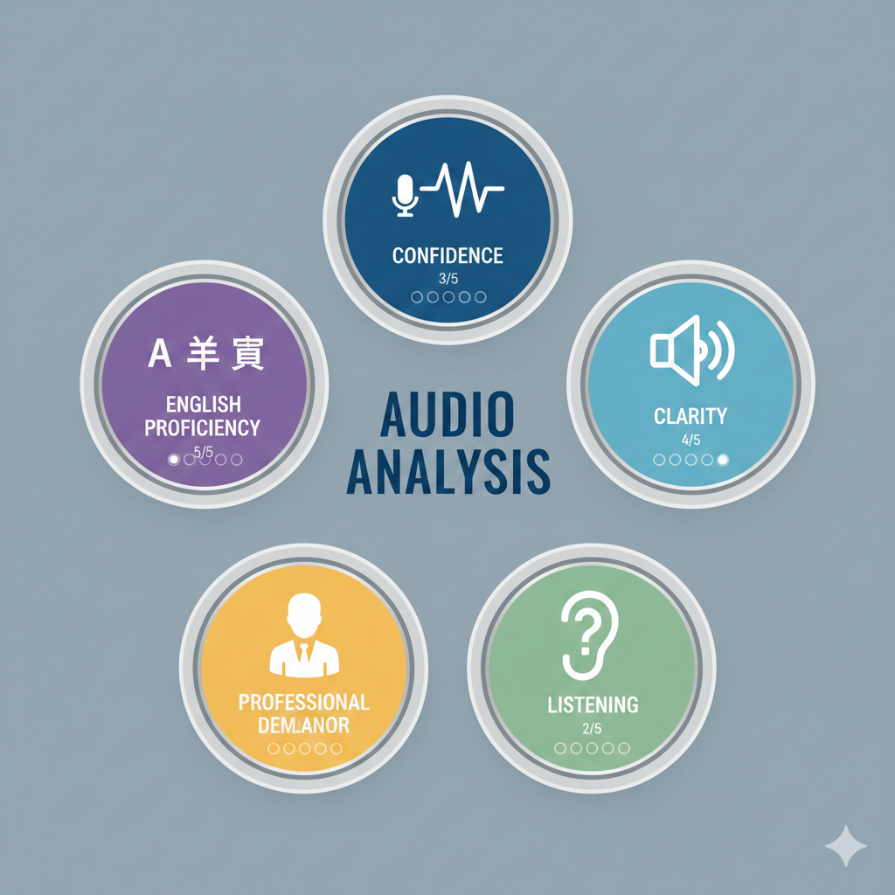

The Five Core Soft Skills We Measure

After testing various combinations, we settled on five parameters that can be reliably detected from audio:

- Confidence

Voice stability, conviction in responses, assertiveness. Does the candidate sound like they believe what they’re saying?

- Clarity

Articulation quality, pronunciation, how easy it is to understand what they’re saying. Not about accent — about whether words are clearly formed.

- Listening Skills

How well answers connect to questions asked. Does the candidate actually address what was asked, or do they give a rehearsed answer that doesn’t quite fit?

- Professional Demeanor

Tone appropriateness, emotional composure. Can they maintain professionalism even when asked challenging questions?

- English Proficiency

Grammar in spoken language, vocabulary range, fluency. Particularly relevant for our context — Indian universities preparing students for global job markets.
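One way to make these five parameters machine-checkable is to ask the model for JSON and validate it before anything reaches a report. This is a sketch under assumptions: the snake_case keys, the `SkillAssessment` type, and the JSON shape are hypothetical, and scores are assumed to use the 1-5 scale described later in this post.

```python
import json
from dataclasses import dataclass

# The five parameters above. These snake_case keys are illustrative;
# the post does not publish its output schema.
SOFT_SKILLS = (
    "confidence",
    "clarity",
    "listening_skills",
    "professional_demeanor",
    "english_proficiency",
)


@dataclass(frozen=True)
class SkillAssessment:
    score: int     # 1-5
    feedback: str  # observation-based explanation for the score


def parse_soft_skills(raw_json: str) -> dict[str, SkillAssessment]:
    """Parse the model's JSON output, rejecting missing or out-of-range scores."""
    data = json.loads(raw_json)
    missing = [skill for skill in SOFT_SKILLS if skill not in data]
    if missing:
        raise ValueError(f"missing parameters: {missing}")
    parsed = {}
    for skill in SOFT_SKILLS:
        score = data[skill]["score"]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
            raise ValueError(f"{skill}: score {score!r} is not an integer from 1 to 5")
        parsed[skill] = SkillAssessment(score=score, feedback=str(data[skill]["feedback"]))
    return parsed
```

Failing loudly on a malformed response lets the caller retry the evaluation instead of storing a bad score.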

How Multimodal Analysis Works

Gemini 2.0 Flash accepts audio files directly as input. We upload the interview recording, and the model processes both the semantic content and the paralinguistic features in a single pass.

Paralinguistic features include:

- Tone variations throughout the response

- Speaking pace and pace changes

- Hesitation markers (pauses, restarts, filler sounds)

- Volume and energy levels

- Emotional cues

The model doesn’t just transcribe — it “listens” to how things are said.

One technical note: Gemini’s Files API doesn’t accept webm format directly (which is what we get from browser recordings). We use ffmpeg to convert webm to wav before uploading.

```shell
ffmpeg -i interview.webm -acodec pcm_s16le interview.wav
```

The WAV file is uploaded via the Files API, and the returned file reference is passed to Gemini. Sending a long recording inline with the prompt isn't practical, because inline request data is size-limited.
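A sketch of the convert-then-upload flow, assuming a recent release of the `google-genai` SDK (`from google import genai`) and an API key in the environment. The function names and the temperature value are illustrative; the post does not publish its code.

```python
import subprocess
from pathlib import Path


def webm_to_wav_command(src: str, dst: str) -> list[str]:
    # Same conversion as the command above, plus -y so ffmpeg overwrites an
    # existing output file instead of stopping to ask.
    return ["ffmpeg", "-y", "-i", src, "-acodec", "pcm_s16le", dst]


def convert_webm_to_wav(src: str) -> str:
    dst = str(Path(src).with_suffix(".wav"))
    # check=True raises CalledProcessError if ffmpeg exits with an error.
    subprocess.run(webm_to_wav_command(src, dst), check=True)
    return dst


def score_audio(wav_path: str, prompt: str) -> str:
    # Imported here so the helpers above work without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment
    audio = client.files.upload(file=wav_path)  # Files API upload
    response = client.models.generate_content(
        model="gemini-2.0-flash",
        contents=[prompt, audio],  # the uploaded file is passed by reference
        config=types.GenerateContentConfig(
            temperature=0.2,  # illustrative; the post does not give its value
            response_mime_type="application/json",
        ),
    )
    return response.text
```

For example, `convert_webm_to_wav("interview.webm")` returns `"interview.wav"`, which can then go to `score_audio` together with the scoring prompt.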

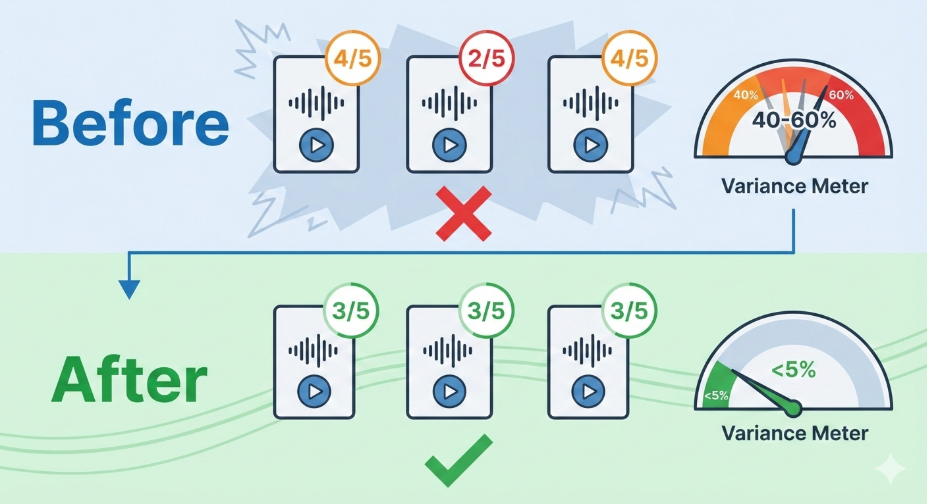

The Consistency Problem

This is where things got difficult.

Our first attempts at soft skill scoring were wildly inconsistent, which showed how hard it is to get consistent output from an LLM. The same audio file, with the same prompt, would produce different scores across runs. One run might rate confidence as 4/5, the next as 2/5.

The variance was 40-60% across runs. Unusable for any real evaluation system.
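The post doesn't say exactly how the 40-60% figure was computed. One plausible way to quantify run-to-run variance is to score the same recording several times and take, per parameter, the coefficient of variation (population standard deviation divided by mean). The `score_once` callable stands in for a full evaluation call and is hypothetical.

```python
import statistics
from typing import Callable


def run_to_run_spread(
    score_once: Callable[[], dict[str, int]], runs: int = 5
) -> dict[str, float]:
    """Score the same audio `runs` times and return, per parameter, the
    coefficient of variation (pstdev / mean) of the scores across runs."""
    samples: dict[str, list[int]] = {}
    for _ in range(runs):
        for skill, score in score_once().items():
            samples.setdefault(skill, []).append(score)
    return {
        skill: statistics.pstdev(scores) / statistics.mean(scores)
        for skill, scores in samples.items()
    }
```

For example, a parameter scored 4 on one run and 2 on the next has a coefficient of variation of about 0.33, while one scored identically on every run has 0.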

We tried several fixes:

Attempt 1: Better prompts

We wrote detailed rubrics. We added examples. We specified exactly what each score level meant.

It helped, but not enough. The model would interpret the rubric differently across runs.

Attempt 2: Temperature tuning

Lower temperature reduces randomness. We dropped it significantly.

It helped somewhat, but soft skill scoring requires reasoning, not just pattern matching. Too low a temperature made the evaluations feel mechanical and caused them to miss nuance.

Attempt 3: The 1-5 scale trick

This made the biggest difference.

Instead of asking for scores on a 1-10 scale (too granular, too much room for interpretation), we switched to 1-5 with very explicit definitions for each level:

| Score | Meaning | Hiring Signal |
|---|---|---|
| 1/5 | Well Below Expectations: fundamental gaps, confusion, inability to engage | Strong rejection |
| 2/5 | Below Expectations: limited understanding, lacks depth, struggles with follow-ups | Likely rejection |
| 3/5 | Meets Expectations: adequate competency, relevant responses, baseline capability | Acceptable hire |
| 4/5 | Exceeds Expectations: strong command, compelling examples, clear impact | Strong hire |
| 5/5 | Far Exceeds Expectations: exceptional mastery, thought leadership, significant achievements | Exceptional hire |

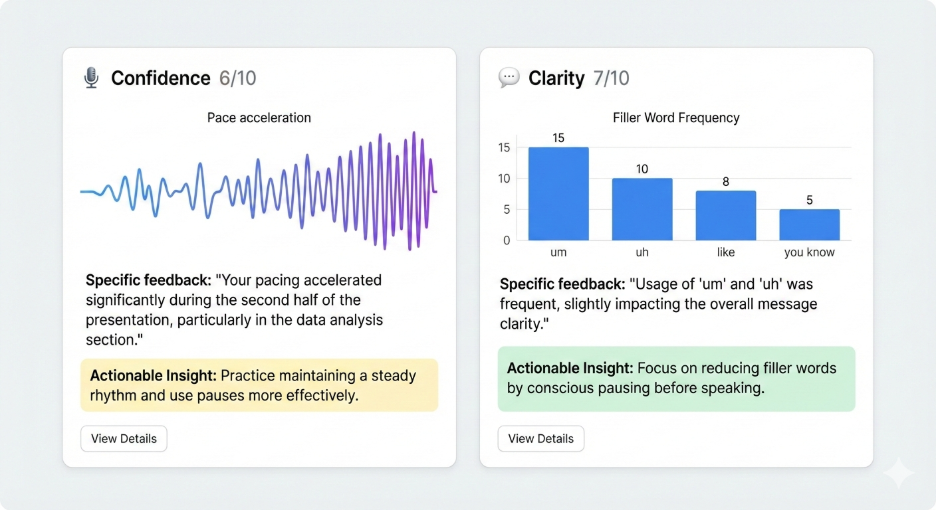
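Keeping the rubric as data means the identical anchor text goes into every request, which is the point of spelling out each level. A minimal prompt builder; `build_scoring_prompt` and its wording are hypothetical, not the prompt used in production.

```python
# The 1-5 rubric from the table above, stored as data so every run
# sends exactly the same level definitions.
RUBRIC = {
    1: ("Well Below Expectations", "fundamental gaps, confusion, inability to engage"),
    2: ("Below Expectations", "limited understanding, lacks depth, struggles with follow-ups"),
    3: ("Meets Expectations", "adequate competency, relevant responses, baseline capability"),
    4: ("Exceeds Expectations", "strong command, compelling examples, clear impact"),
    5: ("Far Exceeds Expectations", "exceptional mastery, thought leadership, significant achievements"),
}


def build_scoring_prompt(skill: str, definition: str) -> str:
    """Hypothetical prompt template that embeds the anchored rubric."""
    levels = "\n".join(
        f"{score}/5 = {label}: {detail}"
        for score, (label, detail) in sorted(RUBRIC.items())
    )
    return (
        f"Listen to the interview audio and rate the candidate's {skill} "
        f"({definition}) on a 1-5 scale.\n"
        f"Use exactly these levels:\n{levels}\n"
        "Answer with a single integer from 1 to 5 and a short justification "
        "citing specific moments in the audio."
    )
```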

We then normalize to 10 for the final report (multiply by 2, so reported scores fall on 2, 4, 6, 8, or 10), but the model reasons on the 1-5 scale.

Why does this work? Smaller scales with strong contextual anchors give the model less room to drift. “Is this a 7 or an 8?” is a harder question than “Does this meet expectations or exceed them?”
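The rescaling itself is trivial, but validating the model's value before doubling it keeps an out-of-range or non-integer response from silently reaching a report. A minimal sketch; `to_report_scale` is a hypothetical name.

```python
def to_report_scale(score_1_to_5: int) -> int:
    """Rescale a 1-5 model score to the /10 report scale by doubling."""
    # bool is a subclass of int, so reject it along with non-integers.
    if isinstance(score_1_to_5, bool) or not isinstance(score_1_to_5, int):
        raise TypeError("expected an integer score")
    if not 1 <= score_1_to_5 <= 5:
        raise ValueError(f"score {score_1_to_5} is outside 1-5")
    return score_1_to_5 * 2
```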

Combined effect:

With all three improvements — detailed rubrics, tuned temperature, and the 1-5 scale — variance dropped from 40-60% to under 5%.

Same audio, multiple runs, nearly identical scores.

What the Output Looks Like

The evaluation produces both scores and actionable feedback. Not just “Confidence: 3/5” but explanations like:

“Your pace is mostly stable, but accelerates noticeably when answering technical questions. This can signal nervousness even when your content is correct. Practice slowing down by 10-15% during technical explanations.”

Or:

“You use filler words (‘basically’, ‘like’) primarily during scenario-based questions. This suggests you may benefit from practicing structured thinking frameworks like STAR before speaking.”

The feedback is specific to what was observed in the audio, not generic advice.

Processing Time and Cost

For a 15-minute interview:

- Processing time: Roughly 15 minutes (runs asynchronously; candidate is notified when complete)

- Cost: Approximately ₹3.43 (~$0.038) per evaluation
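Because each evaluation is billed independently, batch cost scales linearly with the number of interviews. Taking a hypothetical overnight batch of N = 1,000 mock interviews as an example:

$$
\text{cost}(N) = N \times \text{₹}3.43 \approx N \times \$0.038
\quad\Rightarrow\quad
\text{cost}(1000) = \text{₹}3{,}430 \approx \$38
$$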

The async processing works for our use case. Students complete a mock interview, go do something else, and come back to a detailed evaluation. Universities run batch evaluations overnight.
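Since each evaluation is independent, an overnight batch can run many at once. A sketch of bounded concurrency with `asyncio.Semaphore`; `run_batch`, the `evaluate` callable, and the default limit of 8 are assumptions, since a real limit would come from the account's API quotas.

```python
import asyncio
from typing import Awaitable, Callable


async def run_batch(
    interview_ids: list[str],
    evaluate: Callable[[str], Awaitable[dict]],
    max_concurrent: int = 8,
) -> dict[str, dict]:
    """Evaluate many interviews concurrently, capping in-flight evaluations."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def evaluate_one(interview_id: str) -> tuple[str, dict]:
        async with semaphore:  # at most max_concurrent evaluations at once
            return interview_id, await evaluate(interview_id)

    pairs = await asyncio.gather(*(evaluate_one(i) for i in interview_ids))
    return dict(pairs)
```

A per-interview failure would abort the whole `gather` here; passing `return_exceptions=True` is one way to let the rest of the batch finish.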

If real-time soft skill feedback during the interview becomes a requirement, we’d need to rethink the architecture. But for post-interview evaluation, async is fine.

What’s Still Not Perfect

Accent handling: The model generally handles Indian English accents well, but some regional variations still cause occasional misinterpretation of confidence signals. A speaking pattern that’s culturally normal might be flagged as hesitation.

Background noise: Noisy environments affect accuracy. We don’t do preprocessing to filter noise, which means evaluations of recordings made in loud environments are less reliable.

Very short responses: Single-word or very brief answers don’t give the model enough signal to evaluate soft skills meaningfully. We flag these as “insufficient audio for evaluation” rather than guessing.
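A duration check is one simple way to raise the "insufficient audio" flag before spending a model call. This sketch assumes each response is available as its own WAV clip and uses an arbitrary 5-second threshold; the post states neither, and duration alone won't catch every low-signal answer.

```python
import wave
from typing import Optional

# Hypothetical threshold; the post does not say how short is "too short".
MIN_SECONDS = 5.0


def audio_duration_seconds(wav_path: str) -> float:
    with wave.open(wav_path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_evaluable(wav_path: str, min_seconds: float = MIN_SECONDS) -> Optional[str]:
    """Return a flag message instead of a score when the clip is too short."""
    if audio_duration_seconds(wav_path) < min_seconds:
        return "insufficient audio for evaluation"
    return None
```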

Code-switching: Some candidates switch between English and Hindi mid-sentence. The model handles this, but soft skill evaluation for code-switched speech is less consistent than for single-language responses.

Why This Matters for Universities

Placement officers at universities have always known that soft skills matter. They can see it: the student who knows everything but freezes in interviews, the one who's technically average but interviews brilliantly.

But they couldn’t measure it at scale. You can’t have faculty sit through thousands of mock interviews and provide detailed soft skill feedback.

Now they can see:

- Which students struggle with confidence despite strong technical knowledge

- Who needs to work on clarity vs. listening vs. pace

- How individual students improve over multiple practice sessions

- Cohort-level patterns (for example, whether third-years consistently struggle with professional demeanor)
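Cohort-level views like these can come from a simple group-by over stored scores. A sketch assuming a hypothetical flat record shape with `cohort`, `skill`, and `score` fields:

```python
from collections import defaultdict
from statistics import mean


def cohort_averages(records: list[dict]) -> dict[tuple[str, str], float]:
    """Average each skill's score per cohort.

    Records are assumed (hypothetically) to look like
    {"cohort": "third-year", "skill": "professional_demeanor", "score": 2}.
    """
    buckets: dict[tuple[str, str], list[int]] = defaultdict(list)
    for record in records:
        buckets[(record["cohort"], record["skill"])].append(record["score"])
    return {key: mean(scores) for key, scores in buckets.items()}


def weakest_skill(averages: dict[tuple[str, str], float], cohort: str) -> str:
    """Return the skill with the lowest average score for one cohort."""
    in_cohort = {skill: avg for (c, skill), avg in averages.items() if c == cohort}
    return min(in_cohort, key=in_cohort.get)
```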

Soft skills stop being an “invisible” part of placement readiness and become something trackable and improvable.

Summary

Measuring soft skills from audio is no longer experimental. With multimodal models that can process audio directly, we can detect confidence, clarity, listening ability, professional demeanor, and language proficiency from how candidates speak, not just what they say.

The hard part wasn’t the AI — it was consistency. Getting reliable, reproducible scores required careful attention to scoring scales, rubric design, and temperature tuning.

This approach powers our post-interview evaluations. It’s not real-time coaching (yet), but it gives candidates specific, actionable feedback on communication skills that text-based systems simply can’t provide.

The goal isn’t to replace human judgment. It’s to give every student access to detailed soft skill feedback — the kind that used to require a personal mentor watching you practice.

Partner with 47Billion for Enterprise-Grade Interview Intelligence

At 47Billion, we provide end-to-end enterprise solutions for AI-powered interview evaluation systems:

- Comprehensive Architecture: Audio analysis → Multimodal processing → Soft skill scoring → Feedback generation

- Validated Blueprints: Gemini 2.0 Flash integration, consistency optimization, and cost-efficient pipelines

- Production-Grade Features: Batch processing, async workflows, multi-language support, and audit trails

Explore scalable, reliable interview intelligence for placement and recruitment: 47Billion Solutions