The world of hiring has a quiet gatekeeper that most job seekers rarely see but almost always encounter: the Applicant Tracking System. For decades, these systems have filtered millions of resumes based on keyword matches, formatting rules, and rigid criteria. But here is the uncomfortable truth—when everyone starts using ChatGPT to craft the perfect resume, the old rules stop working.

This is the story of how I rebuilt our ATS scoring system from the ground up, tackling the unique challenge of evaluating freshers in an era where every resume looks polished, every keyword is optimized, and traditional metrics have lost their meaning.

The Problem with Traditional ATS Scoring

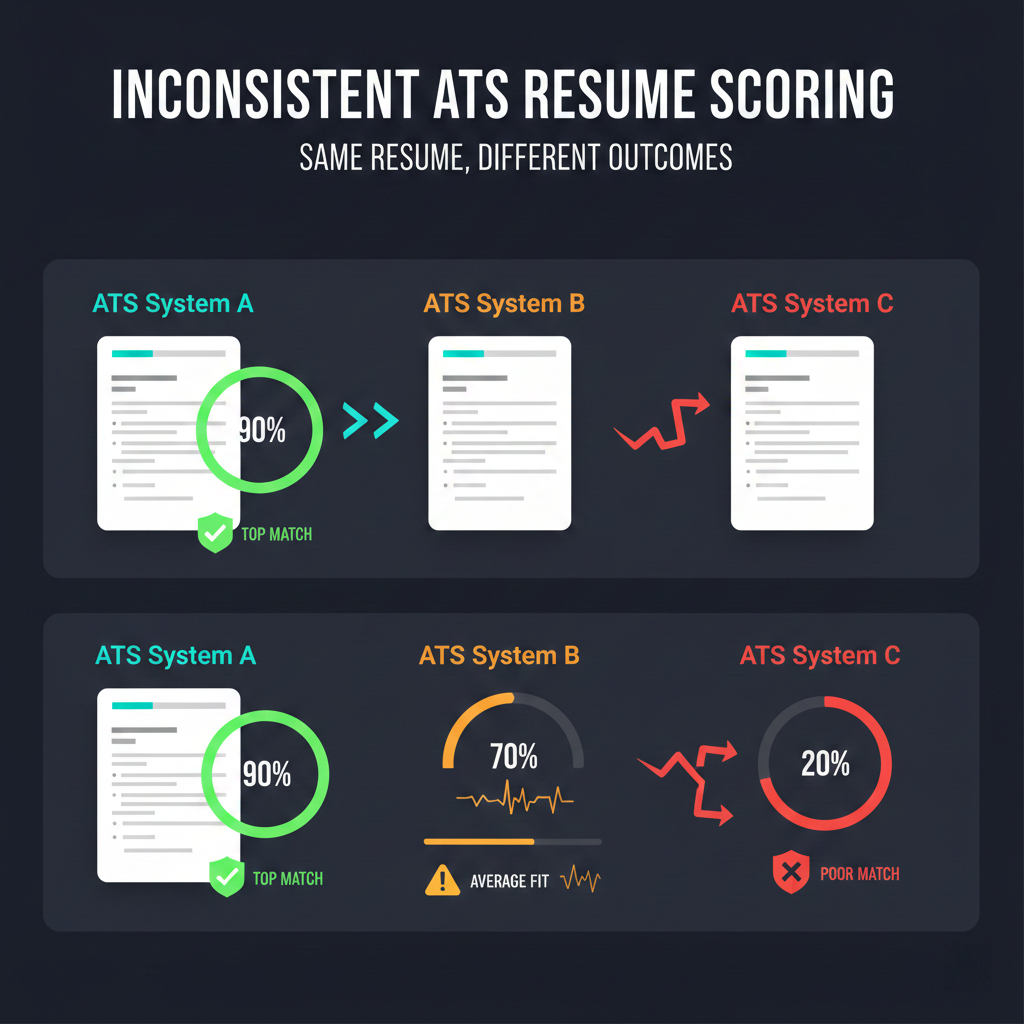

When I began exploring ATS solutions for our education-to-employment platform, the 7Seers job-readiness platform revealed a frustrating reality. I tested multiple popular tools—Enhance CV, Jobscan, and several others—with the same resume. The results were wildly inconsistent. One platform scored a resume at 80 percent while another rated the same document at 30 percent.

The inconsistency revealed a deeper issue. Each tool operated on its own proprietary criteria, and none of them were designed with freshers in mind. A student who had never held a professional job would inevitably score poorly on systems built to evaluate years of experience and corporate achievements.

Another limitation was that many tools considered job descriptions only as an optional, paid feature rather than a core part of candidate evaluation.

Why Keyword Matching No Longer Works

The pre-LLM era of ATS scoring relied heavily on keyword matching, format compliance, and the presence of industry-standard terminology. The logic was straightforward if a job description mentioned “Python” and your resume contained “Python,” you earned points.

Then came ChatGPT, and the game changed overnight.

Today, students generate professionally formatted resumes with all the right buzzwords in a matter of minutes. The result is a homogenization problem. When every resume looks structurally identical and contains the same optimized keywords, how does an HR professional or an automated system distinguish between candidates?

This shift forced me to fundamentally rethink what ATS should actually measure. Instead of surface-level pattern matching, I needed to build something that understands the essence of what a job description demands and the substance of what a candidate offers.

The Journey from Scoring to Grading

My initial instinct was straightforward: feed the job description and resume into an LLM and ask it to generate a score. The approach made sense in theory but failed in practice. LLMs are not deterministic. Even with identical inputs, they can produce varying outputs.

I experimented extensively with parameters designed to reduce this variability—adjusting temperature settings, controlling seed values, modifying top-k and top-p parameters. Nothing worked reliably. The scores fluctuated by seven to eight points across identical runs.

The breakthrough came from an unexpected direction. Instead of asking the LLM to output precise numerical scores, I restructured the problem around grades.

The system now assigns grades—A, C, or E—based on project relevance and experience alignment. A student with projects directly relevant to the job description receives an A grade. Someone with projects in adjacent areas or unrelated domains but demonstrating initiative receives a C. Students without any project work receive an E.

Each grade maps to a configurable weight, and these weights can be adjusted to create appropriate leniency for fresher evaluations. The combination of categorical outputs and flexible weighting finally delivered the consistency that numerical scoring could not achieve.

The Two-Call Architecture

The system is built as a carefully structured evaluation flow that addresses several non-trivial challenges in candidate assessment. It first resolves inconsistencies in how skills and requirements are expressed, a step that is essential but difficult to implement reliably at scale. It then analyzes how a candidate’s experience, projects, and overall profile align with the role’s expectations, which requires nuanced interpretation rather than straightforward matching.

Beyond core evaluation, the system incorporates additional engagement signals that are hard to quantify but important for differentiation, particularly for early-career candidates. Bringing these components together in a consistent and reliable way required significant design and iteration, leveraging our AI & ML services for intelligent candidate evaluation to ensure both accuracy and scalability.

Making the System Fresher-Friendly

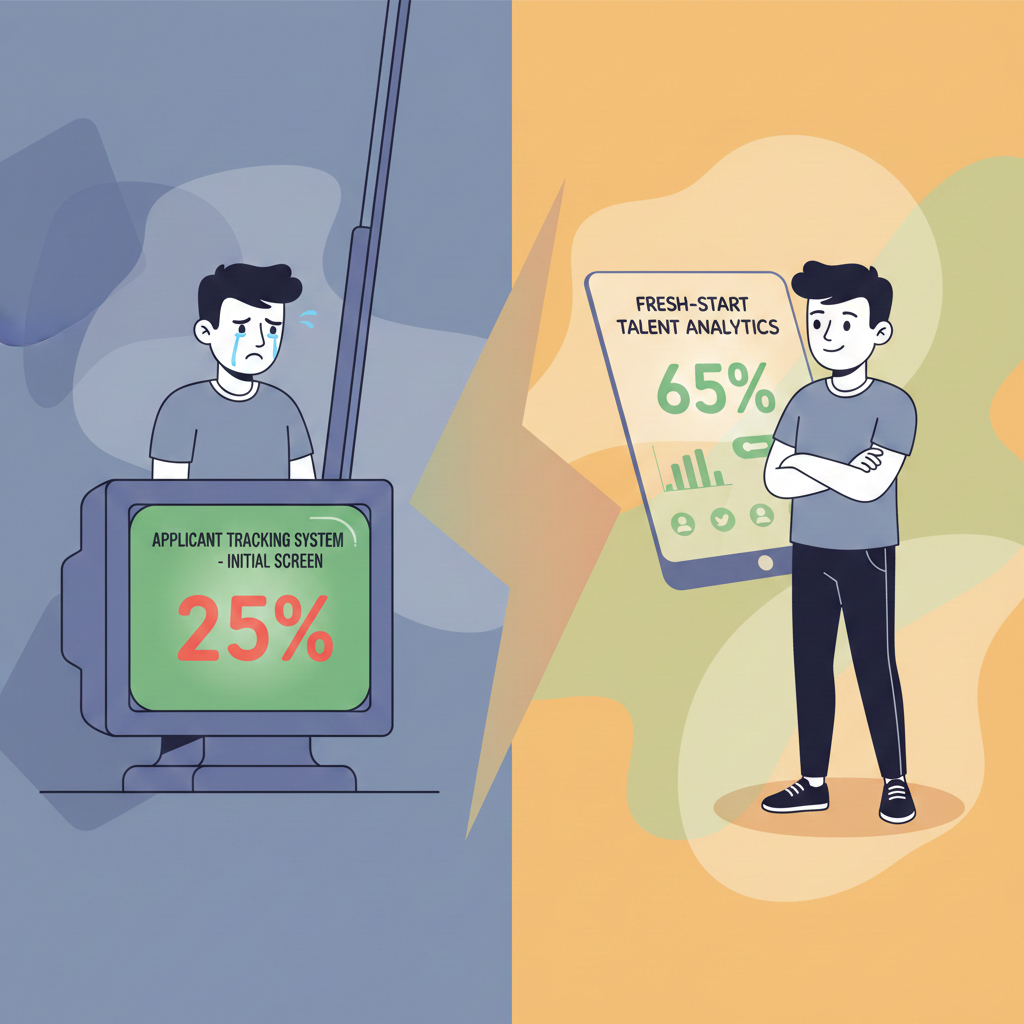

One of the most important design decisions I made was calibrating the system specifically for early-career candidates. Traditional ATS tools routinely score fresher resumes at 20 to 30 percent—numbers that make talented students appear unemployable and give companies no meaningful way to identify potential.

By adjusting the weights assigned to each grade, I targeted an average score range of 60 to 70 percent for freshers who have demonstrated relevant skills and project work. A score of 30 to 40 percent in this system genuinely indicates a poor fit, while the same score on a traditional platform might simply reflect the absence of corporate work history.

This calibration serves both students and employers. Students receive feedback that reflects their actual readiness for specific roles. Companies can set meaningful cutoffs that do not automatically exclude every recent graduate.

The Road Ahead: Soft Skills and Contextual Intelligence

The conversation around ATS scoring is evolving rapidly. As AI handles more routine coding and analytical tasks, soft skills become increasingly valuable differentiators. Leadership experience, collaboration patterns, communication ability—these qualities matter enormously but are notoriously difficult to extract from resumes.

I’m currently exploring whether LLMs can detect signals of these capabilities. A mention of serving as club president suggests leadership. Descriptions of team projects indicate collaboration. The phrasing of achievement statements reveals communication style.

Another frontier I’m investigating is automatic detection of whether a job description targets freshers or experienced professionals. Rather than applying a single weighting scheme globally, the system could analyze the JD itself and adjust its evaluation criteria accordingly.

Lessons for Building LLM-Powered Evaluation Systems

Several insights emerged from this implementation that I believe apply broadly to anyone building LLM-based scoring or evaluation systems.

First, categorical outputs often outperform numerical ones when consistency matters. Asking an LLM to choose between A, B, and C is more reliable than asking it to assign a score between 1 and 100.

Second, context dramatically improves accuracy. Normalizing skills in isolation produces different results than normalizing them within the context of a specific job domain. The same acronym can mean different things in different fields, and surrounding information helps the model make correct interpretations.

Third, hybrid architectures work. Using LLMs for tasks they excel at—semantic understanding, normalization, contextual reasoning—while handling mathematical calculations and business logic in traditional code creates systems that are both intelligent and predictable.

Conclusion

The ATS of yesterday was a keyword filter. The ATS of tomorrow must be a contextual evaluator—one that understands what roles actually require, recognizes potential in candidates without traditional credentials, and adapts its criteria to the reality that polished formatting no longer signals anything meaningful.

For platforms serving students and freshers, this evolution is not optional. The old systems were never built for them. The new systems must be.

What makes this work particularly relevant is its acknowledgment that the rules changed when LLMs became widely accessible. The same technology that allows students to generate perfect resumes can also power systems that look beyond surface polish to evaluate genuine fit and potential.

In a world where everyone can look qualified on paper, the systems that help us identify who actually is qualified become more important than ever, supported by AI‑powered EdTech solutions for student outcomes.

Final Takeaways

- Traditional ATS scoring is highly inconsistent, with different tools giving vastly different scores for the same resume.

- Keyword matching has become obsolete as LLM-generated resumes have homogenized formatting and terminology.

- Grading systems (A, C, E) deliver far more consistent results than numerical scoring with LLMs.

- Skill normalization using LLMs eliminates the need for maintaining massive skill taxonomy databases.

- Fresher-friendly calibration through configurable weights ensures students are evaluated on potential, not just experience.

- Hybrid architectures combining LLM intelligence with traditional logic transform resume evaluation into meaningful, context-aware candidate matching for the AI generation.

Share this on –