How we built a real-time voice AI system for mock interviews using a modular architecture that enables scalable interview practice, full transcript access, and post-interview evaluation.

The Challenge of One-on-One Mock Interviews

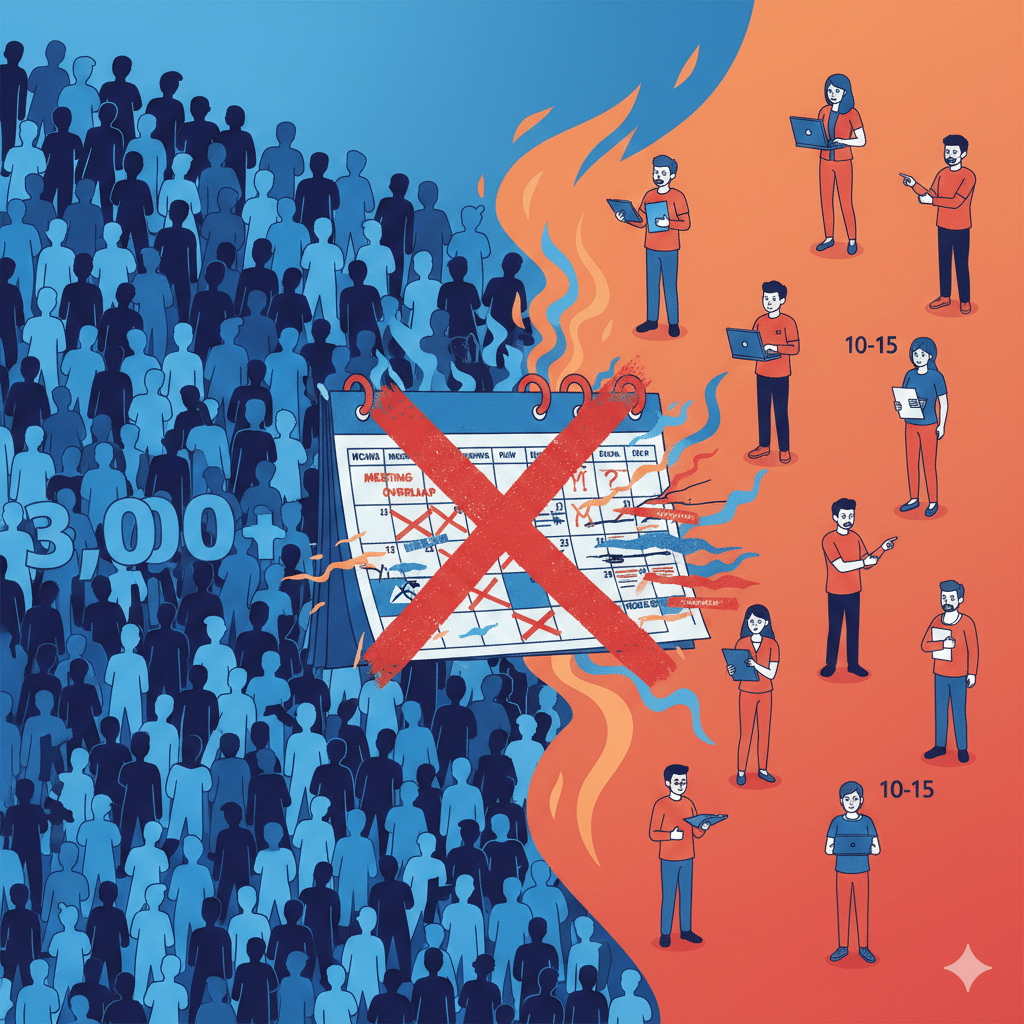

Traditional mock interviews face a fundamental scaling problem that no amount of good intention can solve.

Consider a typical engineering college: 3,000 students preparing for placement season, 10–15 faculty members willing to conduct mock interviews, and a 4-week window before recruiters arrive. Even if every faculty member dedicated three hours daily exclusively to mock interviews—which is unrealistic—each student might get 15–20 minutes of practice. Once. Maybe twice if they’re lucky.

Then comes coordination overhead: scheduling across departments, managing cancellations, matching student backgrounds with faculty expertise, and arranging rooms. The process quickly becomes unmanageable. As a result, most placement cells default to group sessions, which don’t provide the same learning value as individual practice.

The students who need practice most—those who struggle with articulation or confidence—often avoid mock interviews altogether due to fear of judgment.

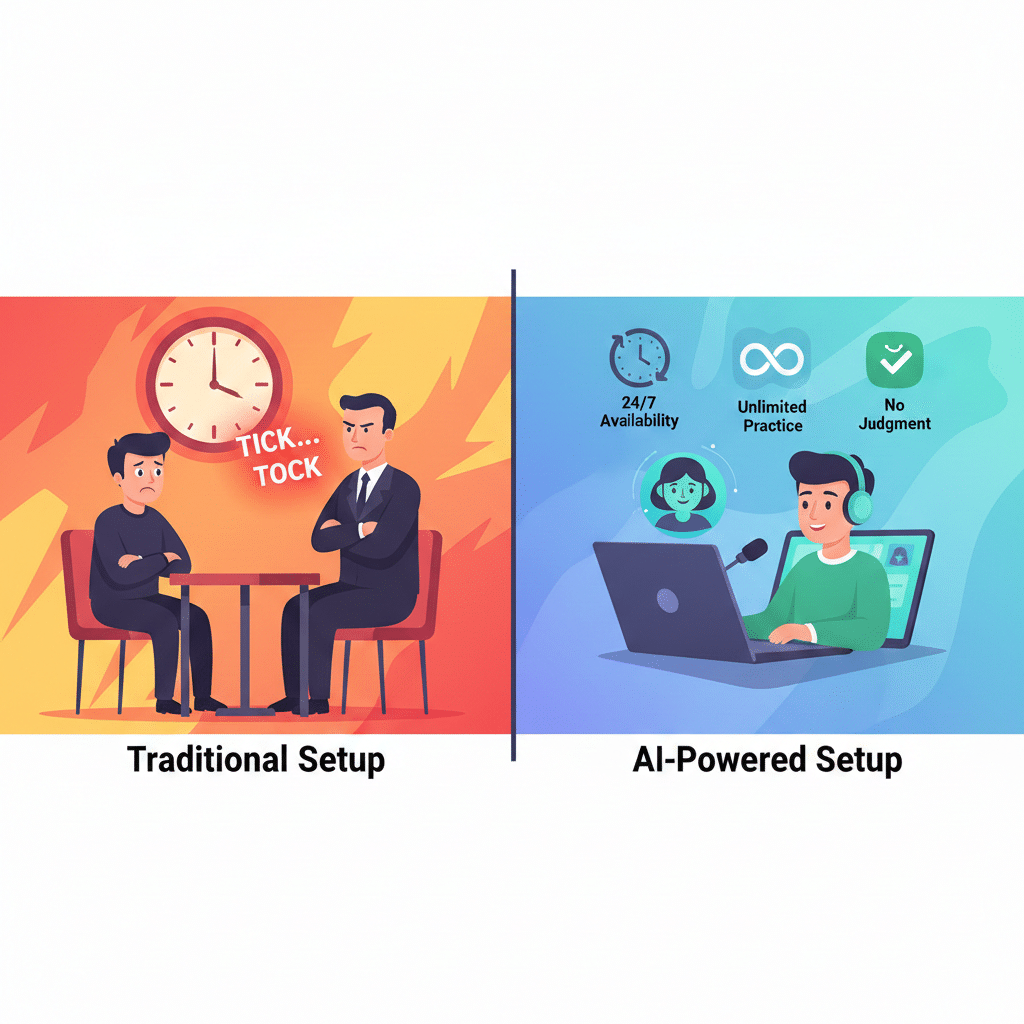

This is where AI-powered mock interviews using real-time voice AI fundamentally change the equation.

An AI interviewer has no scheduling constraints. It’s available 24/7, never fatigues, and allows students to practice repeatedly without social pressure. Instead of one interview per student, institutions can offer unlimited practice. Instead of generic questions, interviews can be role-specific and aligned to real job descriptions. Instead of fleeting verbal feedback, students receive structured, written evaluations they can revisit.

The question wasn’t whether mock interview AI would be useful—it was whether we could build one realistic enough to prepare candidates for human interviewers.

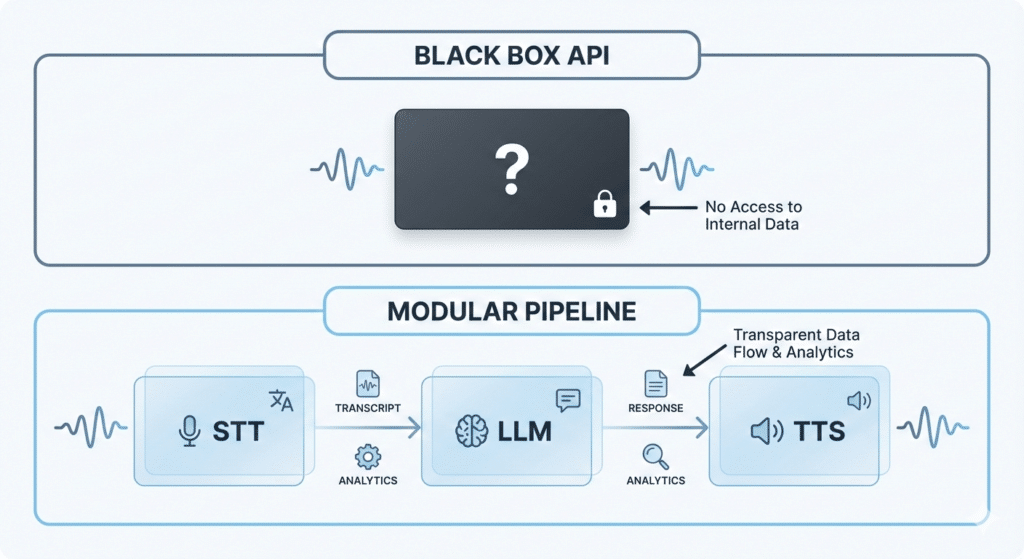

Why a Modular Voice AI Pipeline Was Necessary

Most integrated speech-to-speech AI APIs operate as black boxes: audio in, audio out. While impressive, they lack key capabilities required for our use case.

We needed:

- User transcript access for evaluating content, structure, and clarity

- Raw audio access to analyze hesitation, tone, and confidence

- Cost efficiency at scale, as universities require thousands of sessions

These requirements led us to build a modular voice AI pipeline, which gives us control over each stage: speech-to-text (STT), the LLM, and text-to-speech (TTS).

Approximate Cost Comparison

| Approach | Approx. cost (15-min session) |
|---|---|
| Integrated speech-to-speech APIs | $0.60 – $2.00+ |
| Modular voice AI pipeline | ~$0.30 |

The modular approach requires more engineering effort, but delivers long-term scalability, cost control, and evaluation-grade data access.
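To make the per-session gap concrete at institutional scale, here is a small back-of-the-envelope sketch. The per-session prices come from the table above and the 3,000-student cohort from the example college. The number of sessions per student is an assumption chosen only for illustration.

```python
def campaign_cost(students: int, sessions_per_student: int, cost_per_session: float) -> float:
    """Total spend in dollars for one practice campaign."""
    return students * sessions_per_student * cost_per_session


STUDENTS = 3000           # cohort size from the example college
SESSIONS_PER_STUDENT = 5  # assumption: illustrative value, not a measured figure

modular = campaign_cost(STUDENTS, SESSIONS_PER_STUDENT, 0.30)
integrated_low = campaign_cost(STUDENTS, SESSIONS_PER_STUDENT, 0.60)
integrated_high = campaign_cost(STUDENTS, SESSIONS_PER_STUDENT, 2.00)

print(f"modular:    ${modular:,.0f}")
print(f"integrated: ${integrated_low:,.0f} to ${integrated_high:,.0f}")
```

Under these assumptions the difference is a few thousand dollars at the low end of integrated pricing and tens of thousands at the high end, which is why per-session cost matters more than it first appears.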

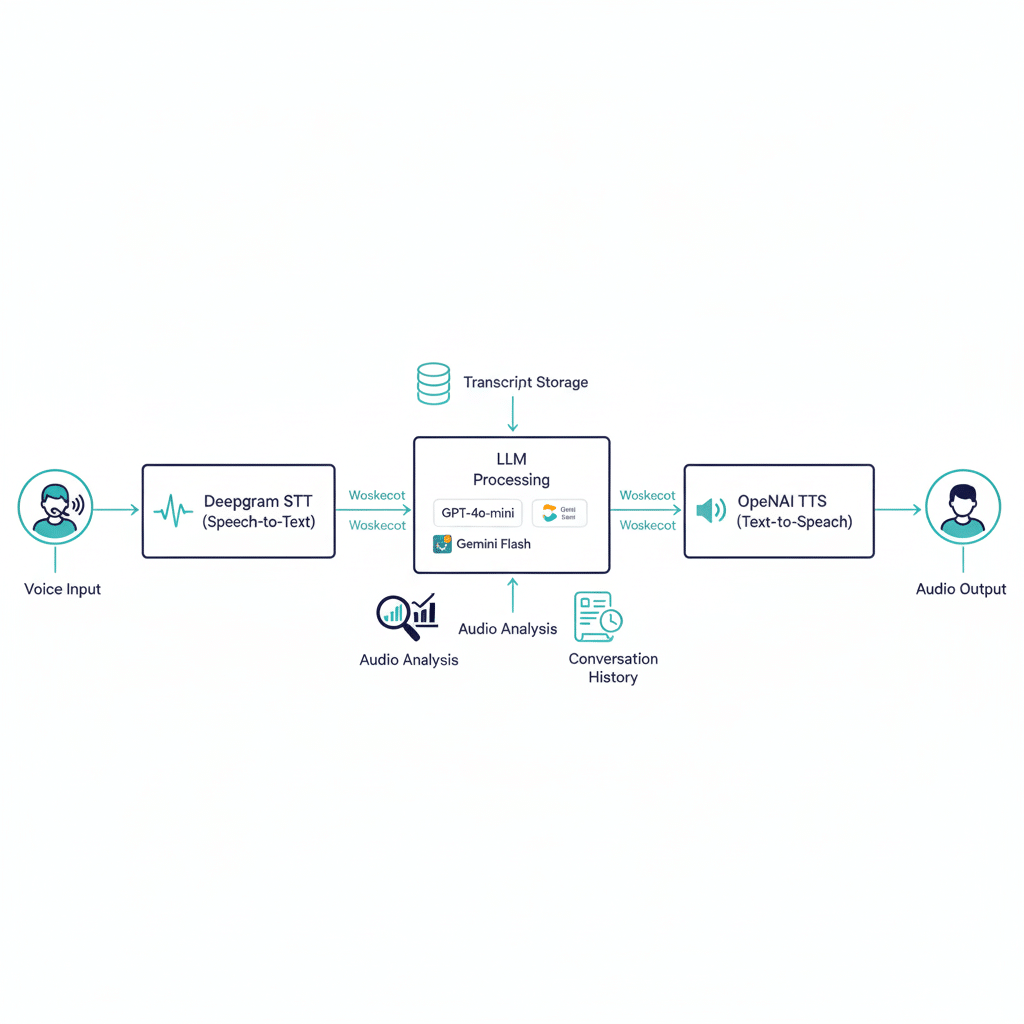

Real-Time Voice AI Architecture

Pipeline overview:

User Audio → Speech-to-Text (Deepgram) → LLM (GPT-4o-mini / Gemini Flash) → Text-to-Speech (OpenAI TTS) → AI Audio

The flow is sequential, but response streaming minimizes perceived latency. Tokens from the LLM are streamed directly to TTS as they arrive, rather than after the full response is complete, which keeps the conversation responsive.
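One common way to bridge a token stream and a TTS engine is to buffer tokens until a sentence boundary and synthesize each sentence as soon as it is complete. The sketch below shows that idea only. The function name `sentence_chunks` and the punctuation-based boundary rule are assumptions for illustration, not a description of the production code, and no real LLM or TTS API is called.

```python
from typing import Iterable, Iterator

SENTENCE_END = (".", "?", "!")


def sentence_chunks(tokens: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM tokens into sentences that can be sent to TTS early."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Flush as soon as the buffered text ends with sentence punctuation.
        if buffer.rstrip().endswith(SENTENCE_END):
            yield buffer.strip()
            buffer = ""
    # Flush whatever is left when the stream ends without punctuation.
    if buffer.strip():
        yield buffer.strip()


if __name__ == "__main__":
    stream = ["Tell", " me", " about", " yourself", ".", " What", " is", " a", " hash", " map", "?"]
    for sentence in sentence_chunks(stream):
        print(sentence)  # each sentence would be handed to TTS here
```

The first sentence can start playing while the LLM is still generating the second. A punctuation rule this simple will split on abbreviations and decimals, so a real system would need a more careful boundary check.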

Component Selection in the Voice AI Stack

Speech-to-Text (STT): Deepgram

We evaluated Whisper, AssemblyAI, and others. Deepgram performed best for:

- Accuracy with Indian English accents

- Low latency

- Pricing that scales for high-volume voice AI systems

Its WebSocket API provides interim transcripts for real-time UI feedback, while final transcripts trigger LLM responses.
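The interim/final split can be sketched as a small message router. The field names used here (`type`, `is_final`, `channel.alternatives[0].transcript`) follow Deepgram's streaming response format as we understand it and should be checked against the current API reference. The callback names `on_interim` and `on_final` are hypothetical.

```python
import json
from typing import Callable


def handle_stt_message(
    raw: str,
    on_interim: Callable[[str], None],
    on_final: Callable[[str], None],
) -> None:
    """Route one STT WebSocket message to the UI or to the LLM trigger."""
    msg = json.loads(raw)
    if msg.get("type") != "Results":
        return  # ignore metadata and other non-transcript messages
    alternatives = msg.get("channel", {}).get("alternatives", [])
    transcript = alternatives[0].get("transcript", "") if alternatives else ""
    if not transcript:
        return  # silence produces empty transcripts
    if msg.get("is_final"):
        on_final(transcript)    # stable text: safe to send to the LLM
    else:
        on_interim(transcript)  # may still change: display only


if __name__ == "__main__":
    sample = json.dumps({
        "type": "Results",
        "is_final": False,
        "channel": {"alternatives": [{"transcript": "I worked on"}]},
    })
    handle_stt_message(sample, lambda t: print("interim:", t), lambda t: print("final:", t))
```

Keeping interim text out of the LLM path avoids generating replies to words the recognizer later revises.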

LLM Layer: GPT-4o-mini & Gemini Flash

- GPT-4o-mini handles real-time conversational flow and follow-up questions

- Gemini Flash powers pre-interview question generation and post-interview evaluation

Prompt engineering was sufficient—no fine-tuning required.

Text-to-Speech (TTS): OpenAI

OpenAI TTS offered the best balance between latency and voice quality. The voice is clearly synthetic, but users report that they adapt quickly, which makes it suitable for interview practice.

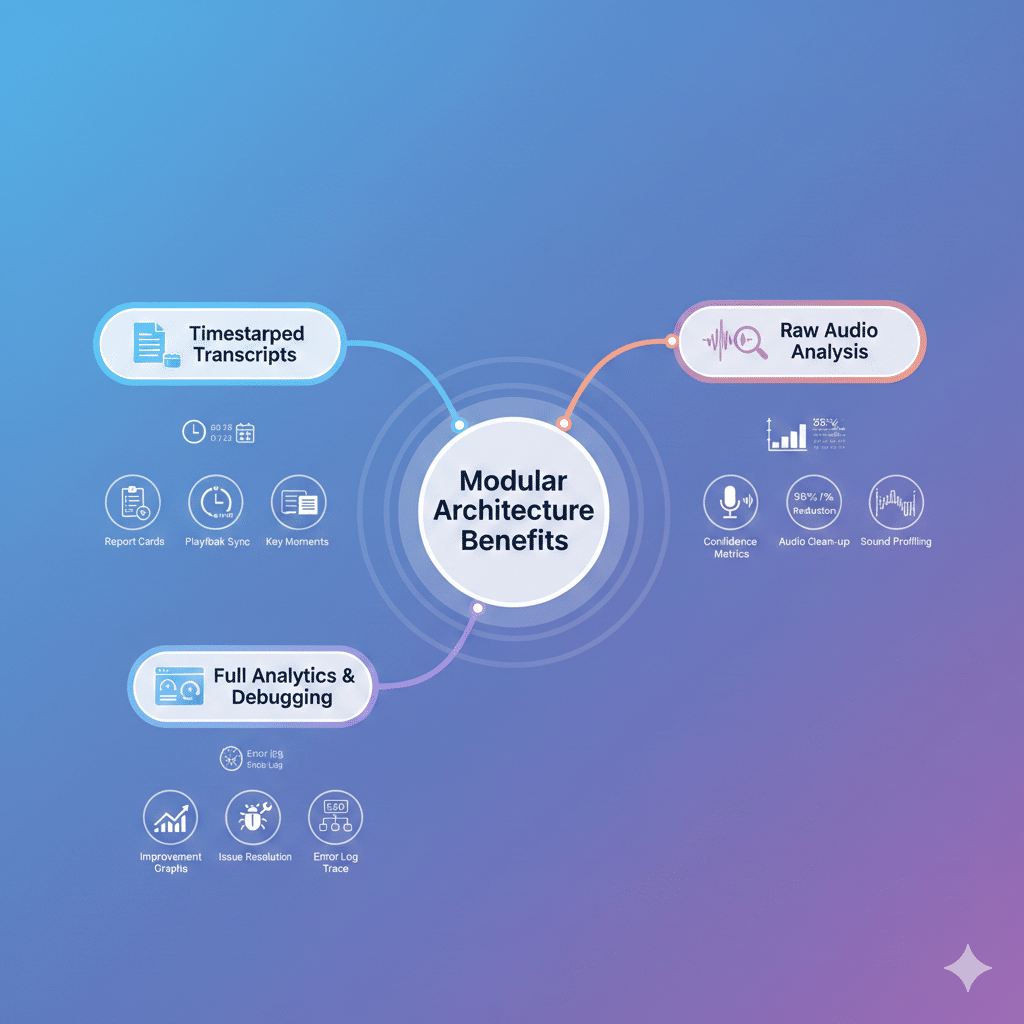

What the Modular Voice AI Architecture Enables

The key advantage is not just cost—it’s data access:

- User transcripts (timestamped, queryable)

- Raw audio files for multimodal analysis

- Full conversation history for analytics, evaluation, and debugging

These capabilities are essential for building a serious AI interview system, not just a chatbot.
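As an illustration of what "timestamped, queryable" can mean in practice, here is a minimal sketch that stores conversation turns in SQLite. The table layout, column names, and helper functions are all assumptions made for this example. Any database would work.

```python
import sqlite3
from typing import List, Tuple


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a transcript store with one row per spoken turn."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS turns (
               session_id TEXT NOT NULL,
               speaker    TEXT NOT NULL,     -- 'candidate' or 'interviewer'
               started_ms INTEGER NOT NULL,  -- offset from session start
               ended_ms   INTEGER NOT NULL,
               text       TEXT NOT NULL
           )"""
    )
    return conn


def add_turn(conn: sqlite3.Connection, session_id: str, speaker: str,
             started_ms: int, ended_ms: int, text: str) -> None:
    conn.execute(
        "INSERT INTO turns VALUES (?, ?, ?, ?, ?)",
        (session_id, speaker, started_ms, ended_ms, text),
    )


def candidate_turns(conn: sqlite3.Connection, session_id: str) -> List[Tuple[int, int, str]]:
    """Return the candidate's answers in chronological order."""
    rows = conn.execute(
        "SELECT started_ms, ended_ms, text FROM turns "
        "WHERE session_id = ? AND speaker = 'candidate' ORDER BY started_ms",
        (session_id,),
    )
    return rows.fetchall()
```

With start and end offsets on every turn, the same rows can later be used to slice the raw audio for per-answer analysis.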

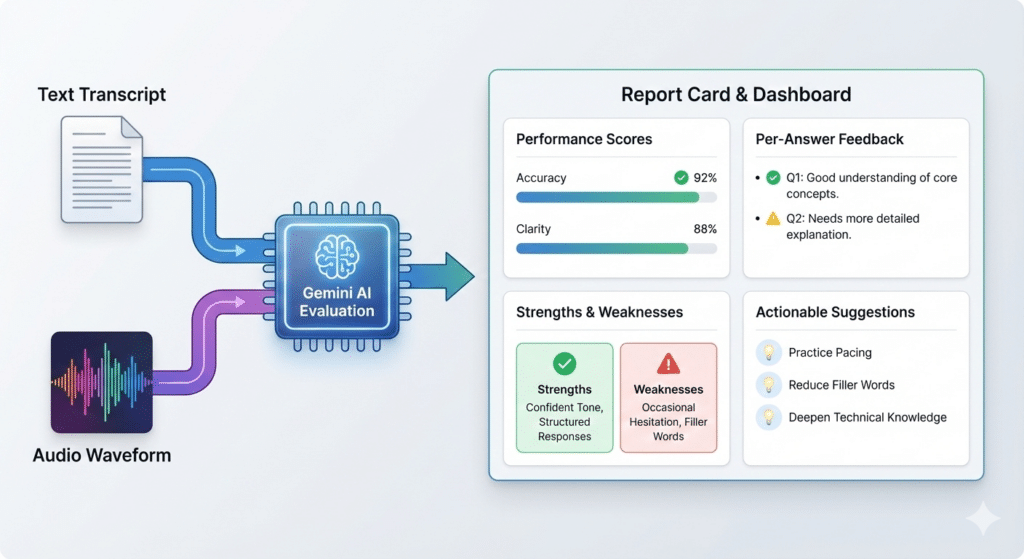

Post-Interview Evaluation Using Multimodal AI

After each interview, we run evaluation using Gemini’s audio-native capabilities, sending both transcript and raw audio.

The evaluation produces:

- Performance scores (technical, communication, clarity)

- Per-answer feedback

- Strengths and weaknesses summary

- Actionable improvement suggestions

Audio analysis captures signals transcripts miss—hesitation, filler words, pacing, and confidence. This evaluation layer is the core reason we avoided integrated speech-to-speech APIs.
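The evaluation outputs listed above map naturally onto a structured result that can be validated before it is shown to a student. The sketch below assumes the evaluator returns JSON. The field names and the 0 to 10 score range are hypothetical choices for illustration, and no model API is called here.

```python
import json
from dataclasses import dataclass
from typing import Dict, List

SCORE_KEYS = ("technical", "communication", "clarity")


@dataclass
class AnswerFeedback:
    question: str
    feedback: str


@dataclass
class Evaluation:
    scores: Dict[str, float]
    per_answer: List[AnswerFeedback]
    strengths: List[str]
    weaknesses: List[str]
    suggestions: List[str]


def parse_evaluation(raw: str, max_score: float = 10.0) -> Evaluation:
    """Parse and validate evaluator output; raises on missing or out-of-range scores."""
    data = json.loads(raw)
    scores = {key: float(data["scores"][key]) for key in SCORE_KEYS}
    for key, value in scores.items():
        if not 0.0 <= value <= max_score:
            raise ValueError(f"{key} score {value} is outside 0..{max_score}")
    return Evaluation(
        scores=scores,
        per_answer=[AnswerFeedback(a["question"], a["feedback"])
                    for a in data.get("per_answer", [])],
        strengths=list(data.get("strengths", [])),
        weaknesses=list(data.get("weaknesses", [])),
        suggestions=list(data.get("suggestions", [])),
    )
```

Rejecting malformed output at this boundary means a bad model response results in a retry instead of a broken report.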

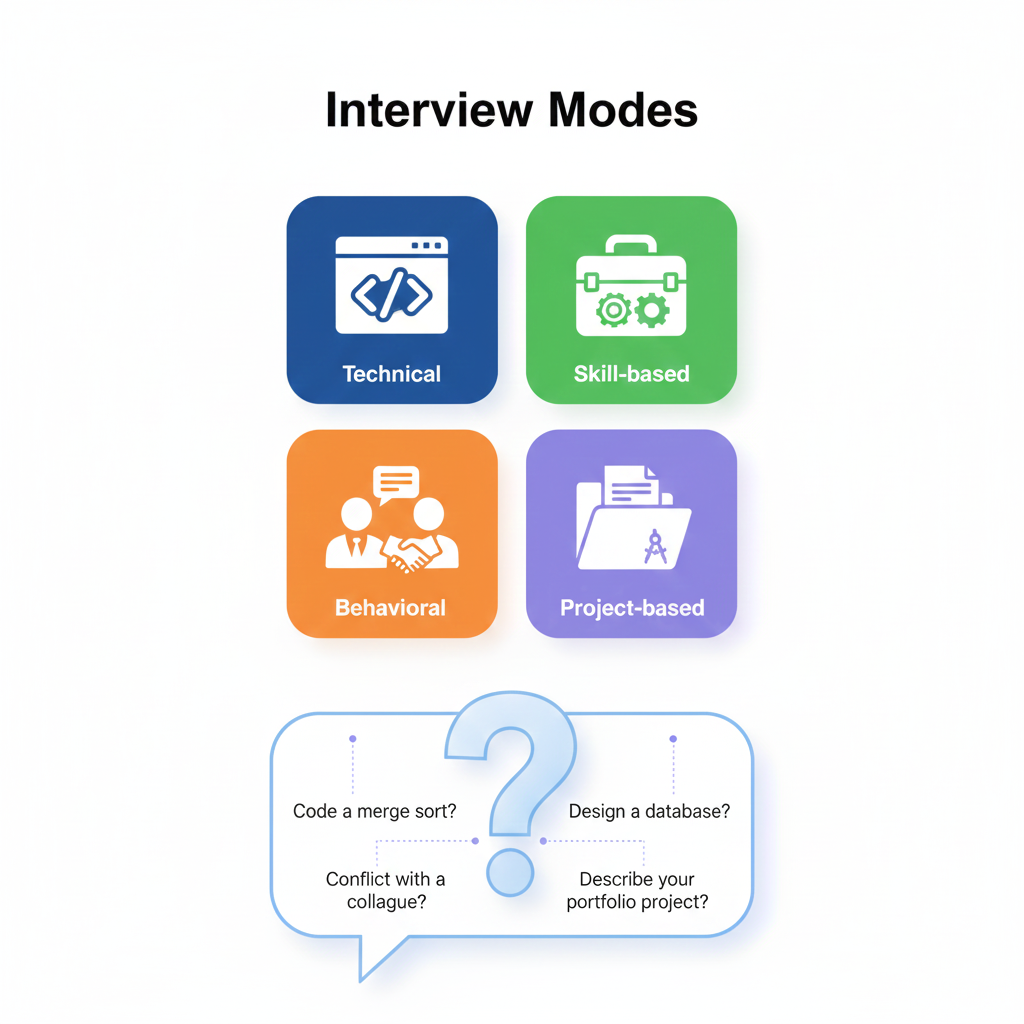

Question Generation with Mode-Based Architecture

Early feedback showed questions felt generic.

The fix was a mode-based question generation system:

- Technical interviews

- Skill-based interviews

- Behavioral/management interviews

- Project-based interviews

Questions are generated before the interview based on the selected mode and then delivered conversationally. This change dramatically improved relevance and user satisfaction.
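A mode-based generator can be as simple as selecting a mode-specific instruction before calling the LLM. The sketch below shows only the prompt-building step. The enum, the focus descriptions, and the prompt wording are hypothetical and stand in for whatever prompts a real system would use.

```python
from enum import Enum


class InterviewMode(Enum):
    TECHNICAL = "technical"
    SKILL_BASED = "skill-based"
    BEHAVIORAL = "behavioral/management"
    PROJECT_BASED = "project-based"


# Assumption: one short focus statement per mode, written for this example.
MODE_FOCUS = {
    InterviewMode.TECHNICAL: "core concepts and problem solving for the role",
    InterviewMode.SKILL_BASED: "hands-on use of the specific skills listed",
    InterviewMode.BEHAVIORAL: "past behavior, teamwork, and decision making",
    InterviewMode.PROJECT_BASED: "design choices and trade-offs in the candidate's projects",
}


def build_question_prompt(mode: InterviewMode, job_description: str, num_questions: int = 8) -> str:
    """Build the question-generation prompt for the selected interview mode."""
    if num_questions < 1:
        raise ValueError("num_questions must be at least 1")
    return (
        f"You are preparing a {mode.value} mock interview.\n"
        f"Focus on {MODE_FOCUS[mode]}.\n"
        f"Write {num_questions} questions, ordered from easier to harder.\n"
        f"Job description:\n{job_description.strip()}"
    )


if __name__ == "__main__":
    print(build_question_prompt(InterviewMode.PROJECT_BASED, "Backend engineer, Python, PostgreSQL", 5))
```

Because the questions are fixed before the session starts, the real-time model only has to deliver them conversationally and ask follow-ups.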

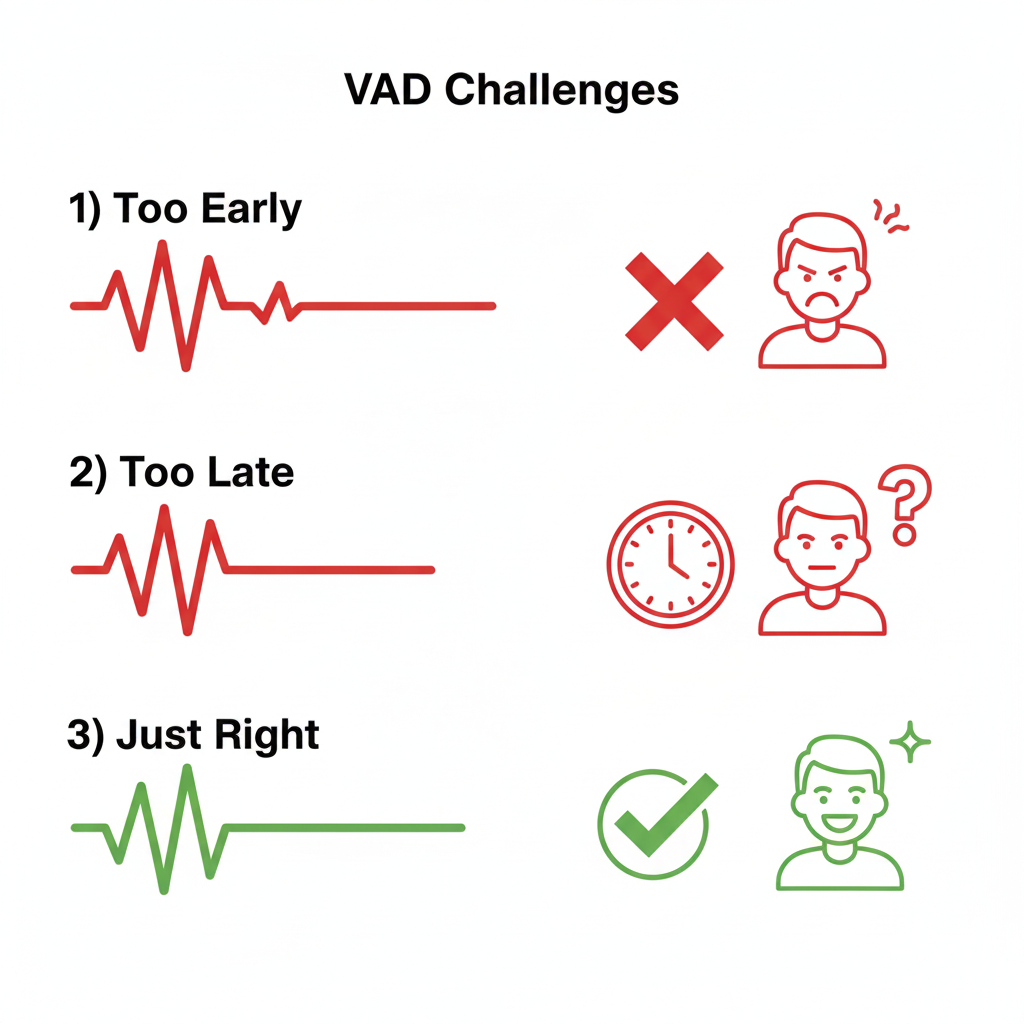

Voice Activity Detection (VAD): An Unexpected Challenge

Detecting when a user finishes speaking proved difficult.

- Early triggers interrupted users

- Late triggers caused awkward silences

We tested Silero VAD and LiveKit VAD. LiveKit introduced memory issues at scale, so we currently use a tuned Silero implementation, with plans to revisit WebRTC-based solutions as tooling matures.
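The early/late trade-off comes down to how much trailing silence is required before a turn is declared finished. The sketch below shows that logic on a list of per-frame speech probabilities, such as those a VAD model like Silero produces. The function name, thresholds, and frame counts are assumptions for illustration and are not the tuned production values.

```python
from typing import Iterable, Optional


def find_end_of_turn(
    speech_probs: Iterable[float],
    threshold: float = 0.5,
    min_silence_frames: int = 20,
    min_speech_frames: int = 5,
) -> Optional[int]:
    """Return the frame index at which the turn is considered over, or None."""
    speech_frames = 0
    silence_run = 0
    for i, prob in enumerate(speech_probs):
        if prob >= threshold:
            speech_frames += 1
            silence_run = 0  # any speech resets the silence counter
        elif speech_frames >= min_speech_frames:
            # Only count silence once the user has actually started talking.
            silence_run += 1
            if silence_run >= min_silence_frames:
                return i
    return None


if __name__ == "__main__":
    # Speech, a short thinking pause, more speech, then a real stop.
    probs = [0.9] * 10 + [0.1] * 3 + [0.9] * 10 + [0.1] * 8
    print(find_end_of_turn(probs, min_silence_frames=3))  # fires during the pause
    print(find_end_of_turn(probs, min_silence_frames=5))  # waits for the real stop
```

A small `min_silence_frames` cuts the user off during a thinking pause, while a large one adds dead air after every answer. Tuning is largely a matter of choosing this value for the target speakers.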

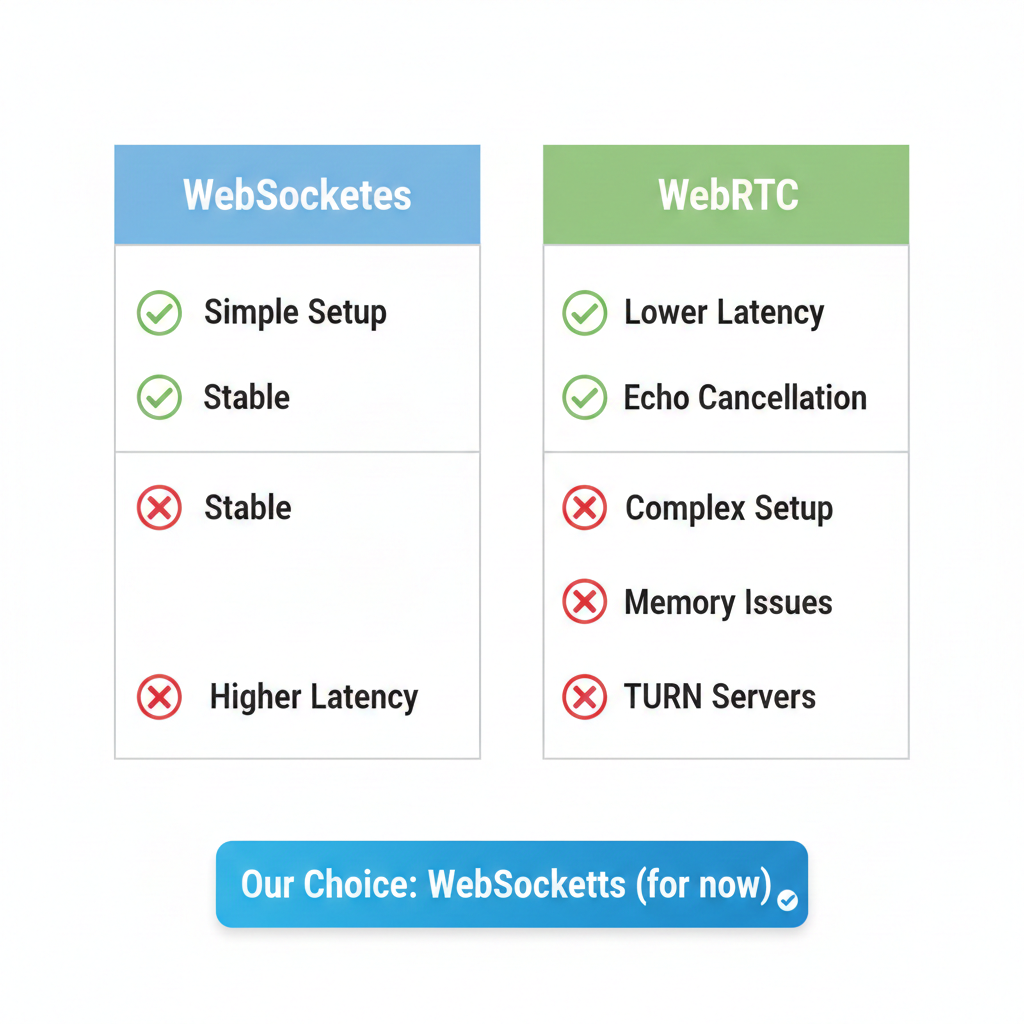

WebSockets vs WebRTC for Voice AI

We evaluated both transport layers:

WebRTC pros: lower latency, echo cancellation

WebRTC cons: TURN servers, operational complexity, memory issues

We chose WebSockets for stability and acceptable latency, with plans to adopt WebRTC once infrastructure matures.

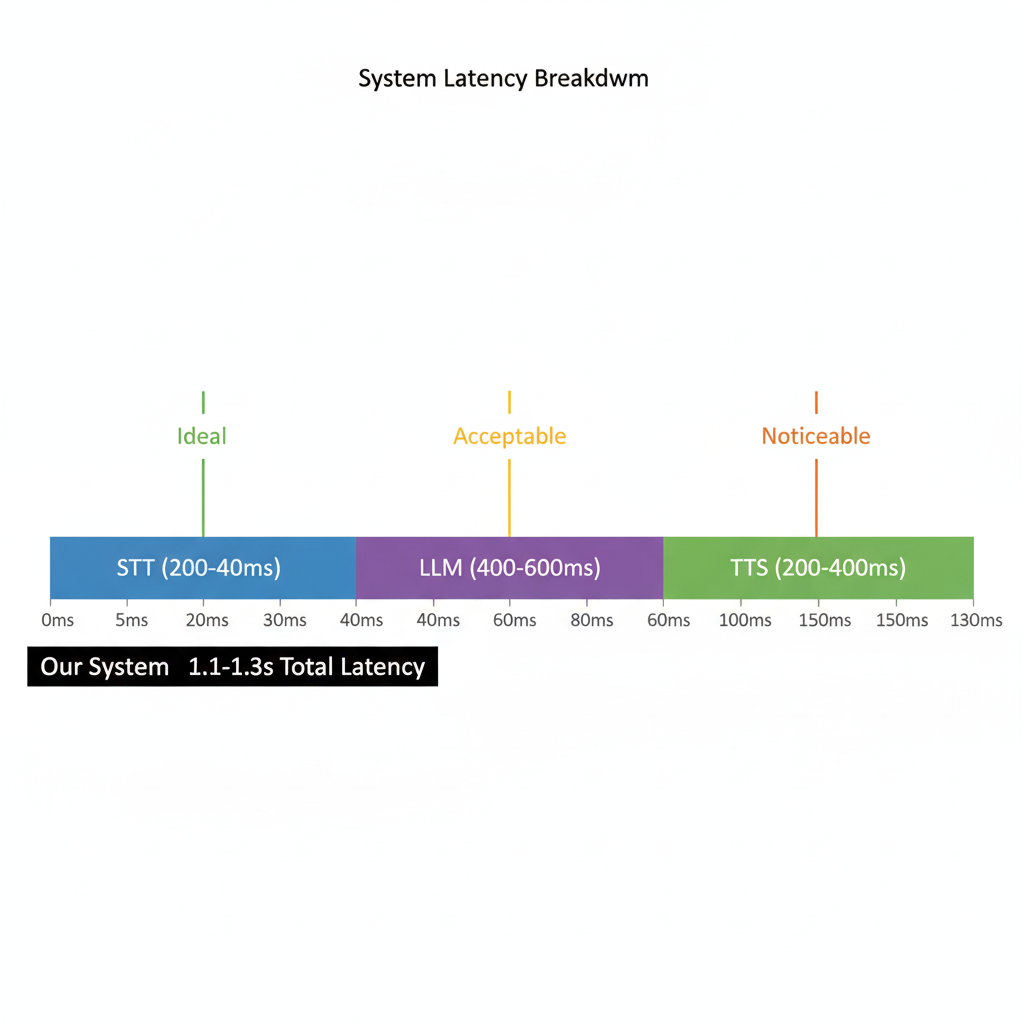

Voice AI Latency: Benchmarks vs Reality

Research benchmarks show:

- <275ms: ideal

- 500ms: acceptable

- 1s: noticeable

Our system achieves roughly 1.1–1.3 seconds of end-to-end latency. Approximate per-stage contributions:

- STT: 200–400ms

- LLM: 400–600ms

- TTS: 200–400ms

While not perfect, users report the experience feels realistic enough for interview practice. Every 100ms improvement noticeably enhances conversation flow.
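The per-stage figures above can be combined into a simple latency budget. This sketch adds the stage ranges and shows each stage's share at the midpoint. It uses only the numbers quoted in this section. The helper names are made up for the example.

```python
from typing import Dict, Tuple

# Per-stage latency ranges in milliseconds, as quoted above.
STAGES_MS: Dict[str, Tuple[int, int]] = {
    "stt": (200, 400),
    "llm": (400, 600),
    "tts": (200, 400),
}


def total_range(stages: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Best-case and worst-case end-to-end latency if stages run back to back."""
    low = sum(lo for lo, _ in stages.values())
    high = sum(hi for _, hi in stages.values())
    return low, high


def share_at_midpoint(stages: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Fraction of total latency each stage contributes at its midpoint."""
    mids = {name: (lo + hi) / 2 for name, (lo, hi) in stages.items()}
    total = sum(mids.values())
    return {name: mid / total for name, mid in mids.items()}


if __name__ == "__main__":
    low, high = total_range(STAGES_MS)
    print(f"end-to-end: {low} to {high} ms")
    for name, share in share_at_midpoint(STAGES_MS).items():
        print(f"{name}: {share:.0%}")
```

The summed range of 800 to 1,400 ms brackets the observed 1.1–1.3 seconds, and the LLM stage accounts for the largest share at the midpoint, so it is the first place to look for savings.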

Current Limitations

- Synthetic voice quality

- No barge-in handling

- VAD edge cases

- Latency above ideal conversational thresholds

Summary

We built a real-time voice AI system for mock interviews using a modular voice AI architecture: Deepgram for STT, GPT-4o-mini and Gemini Flash for reasoning, and OpenAI TTS for speech synthesis.

The modular approach enables transcript access, raw audio analysis, scalable costs, and high-quality post-interview evaluation—capabilities that integrated APIs struggle to provide.

If your use case requires analytics, evaluation, or compliance-grade access to conversation data, a modular voice AI pipeline offers unmatched flexibility.

Building Real-Time Voice AI for Mock Interviews: Architecture, Challenges, and Lessons Learned

Partner with 47Billion for Enterprise-Grade Voice AI Solutions

At 47Billion, we provide end-to-end enterprise solutions for real-time voice AI systems across education, healthcare, and customer service domains:

Comprehensive Architecture: Speech-to-Text → LLM Processing → Text-to-Speech → Evaluation & Analytics.

Validated Blueprints: Deepgram STT, GPT-4o-mini/Gemini Flash orchestration, and cost-optimized modular pipelines (~50% cost reduction vs integrated APIs).

Enterprise-Grade Features: Full transcript access, multimodal audio analysis, post-interaction evaluation, and compliance-ready conversation logging.

Explore scalable, data-driven Voice AI for interview practice, voice assistants, and conversational AI applications: 47Billion Solutions