How modern data engineering is evolving to power large language models, embeddings, and AI-ready systems.

Over the past decade, data engineering has focused on building highly reliable, scalable warehouses optimized for analytics. Clean dbt models, efficient clusters, and low-latency dashboards became the benchmarks of success, measured by accuracy, freshness, and performance. The rise of large language models (LLMs) has introduced a new consumer of data, and serving it requires a fundamentally different approach. Systems designed for aggregation and BI are not structured for contextual reasoning, semantic retrieval, or retrieval-augmented generation (RAG) architectures.

Welcome to a world where the consumers of your data pipelines aren’t humans—they’re AI systems. And they have completely different appetites.

The Great Divide: What Changed?

Here’s the uncomfortable truth that took us months to accept: An LLM doesn’t care about your beautiful data warehouse.

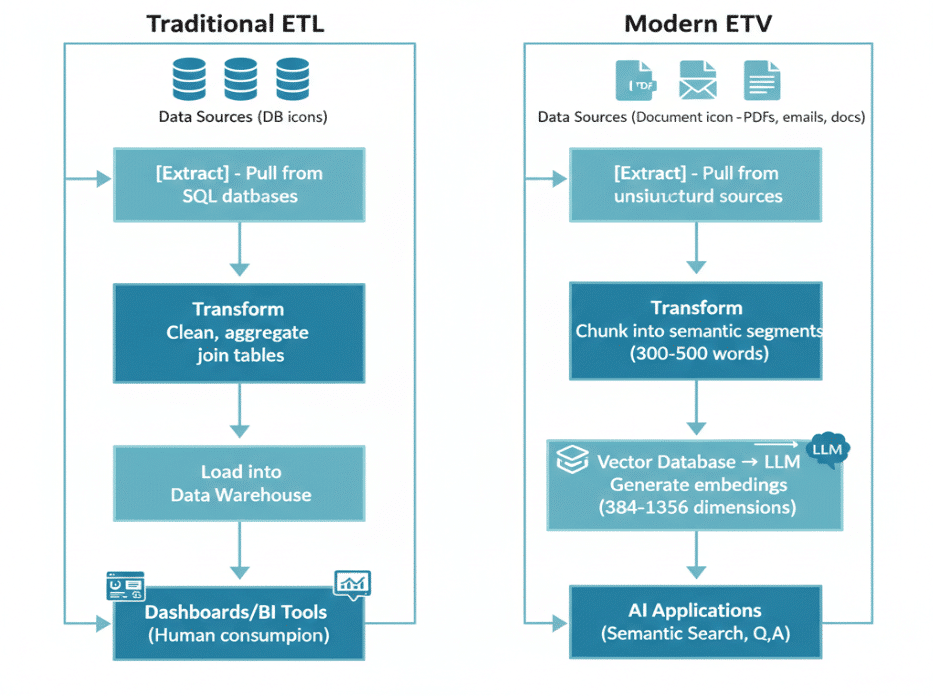

Traditional data systems were designed for humans conducting analysis. SQL databases excel at: “Show revenue by region for Q4.” Dashboards excel at: “Visualize trends over time.” BI tools excel at: “Drill down into anomalies.”

But when an AI asks a question, it’s fundamentally different. It doesn’t want aggregated revenue figures—it wants the context around those figures. It doesn’t need 50 million rows—it needs the right 1,000 words.

The Paradigm Shift:

| Aspect | Traditional Era | LLM Era |
|---|---|---|
| Primary Consumer | Humans via dashboards and SQL queries | AI systems via embeddings and RAG |
| Core Process | ETL: Extract → Transform → Load | ETV: Extract → Transform → Vectorize |
| Success Metric | Query performance, data freshness | Context relevance, retrieval quality |
| Latency Target | Seconds to minutes (acceptable) | Sub-100ms (to feel responsive) |

Architectural Misalignment in AI Deployments

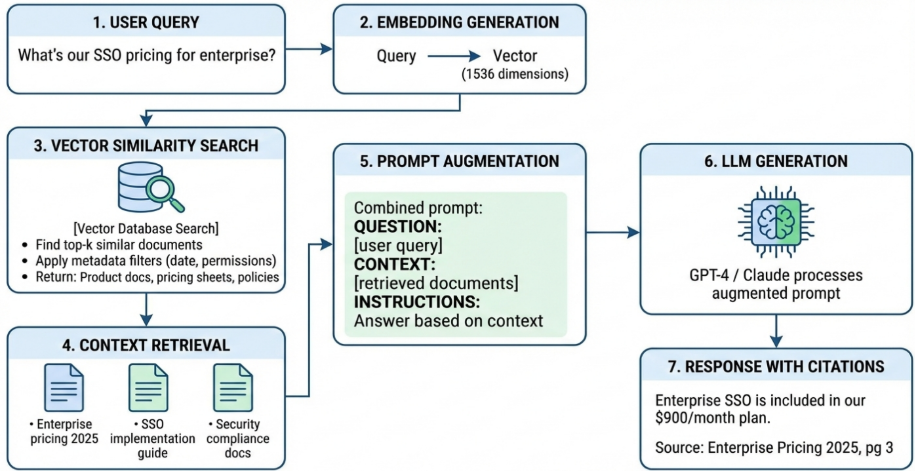

During the rollout of an AI-powered customer support chatbot, a critical limitation in our data architecture became evident. The system relied on a traditional data warehouse containing years of documentation, support tickets, and product specifications—well-structured for analytics but not for retrieval.

The chatbot produced inaccurate responses related to pricing and product capabilities, despite the correct information existing within the warehouse. The root cause was not missing data, but retrieval inefficiency. The architecture was optimized for aggregations and reporting, not for extracting precise contextual passages required by an LLM.

This experience reinforced a key principle: you can’t just point an LLM at your data warehouse and hope for the best. You need a fundamentally different architecture.

Enter ETV: Extract, Transform, Vectorize

If ETL was the workhorse of the dashboard era, ETV is the engine of the AI era.

1. Extract: It’s Not Just Databases Anymore

Traditional ETL pulled from SQL databases. ETV pulls from everywhere humans store knowledge—PDFs in SharePoint, Slack conversations, support tickets, code repositories, email threads, and meeting transcripts.

The challenge? These sources don’t have schemas. They have context. And context is messy.

2. Transform: Chunking Is Your New Schema Design

In traditional ETL, you transform data into clean tables. In ETV, you transform documents into semantically meaningful chunks. And this is where things get interesting.

Chunk too small: You lose context. A sentence about “the pricing change” is useless without knowing which product or when.

Chunk too large: You exceed context windows and dilute relevance. A 5,000-word document about “all our products” won’t help answer a specific pricing question.

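The sweet spot we’ve found: 300-500 words per chunk with a 50-word overlap. At roughly 1.3 tokens per English word, that is about 400-650 tokens, which preserves context while fitting within embedding models that accept 1,024 tokens or more. For models capped at 512 tokens, stay toward the lower end of the range.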
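A minimal sketch of the fixed-size strategy, assuming whitespace-separated words as the unit (a production pipeline would measure chunks with the embedding model's own tokenizer). The helper name `chunk_words` and its defaults are illustrative, not taken from a specific library:

```python
def chunk_words(text: str, chunk_size: int = 400, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words; neighbours share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


# Usage: a 1,000-word document with 400-word chunks and a 50-word overlap.
doc = " ".join(f"word{i}" for i in range(1000))
chunks = chunk_words(doc, chunk_size=400, overlap=50)
print(len(chunks))  # 3 chunks: words 0-399, 350-749, 700-999
```

The window advances by `chunk_size - overlap` words, so each chunk repeats the last 50 words of its predecessor and a sentence cut at one boundary still appears whole in the neighbouring chunk.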

But here’s the kicker: chunking isn’t static. For technical documentation, chunk by code blocks and section headers. For support tickets, keep entire conversations together. For legal documents, chunk by clauses and maintain cross-references.
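For the technical-documentation case, a structure-aware splitter can be sketched as follows. It assumes Markdown input with `#`-style headings and backtick-fenced code, starts a new chunk at each heading, and never cuts inside a code block. `chunk_by_sections` is a hypothetical helper; support tickets or legal clauses would each need their own splitter:

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built this way so it cannot close this listing
HEADING = re.compile(r"^#{1,6}\s")  # ATX heading such as "## Install"


def chunk_by_sections(markdown: str) -> list[str]:
    """Start a new chunk at each heading, but never split inside a fenced code block."""
    chunks: list[str] = []
    current: list[str] = []
    in_code = False
    for line in markdown.splitlines():
        if line.lstrip().startswith(FENCE):
            in_code = not in_code  # entering or leaving a code block
        elif not in_code and HEADING.match(line) and current:
            chunks.append("\n".join(current).strip())  # close the previous section
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [chunk for chunk in chunks if chunk]


doc = "\n".join([
    "# Install",
    "Run the installer.",
    FENCE + "bash",
    "# a shell comment, not a heading",
    "pip install example-package",
    FENCE,
    "## Configure",
    "Edit the config file.",
])
for section in chunk_by_sections(doc):
    print(section.splitlines()[0])  # "# Install", then "## Configure"
```

The shell comment inside the code block is not mistaken for a heading, so the command stays attached to the instructions that explain it. Sections that still exceed the size budget can then be split further with a fixed-size, overlapping window.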

3. Vectorize: From Text to Mathematical Meaning

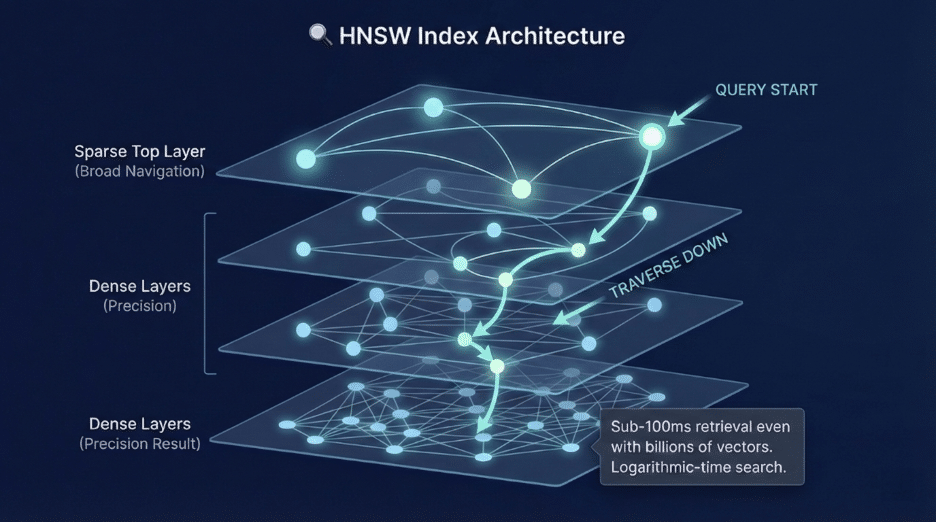

This is where the magic happens. Each chunk gets converted into a high-dimensional vector that captures its semantic meaning.

Think of it like this: in traditional databases, you search for exact text matches. In vector databases, you search for meaning.

Search for “CEO compensation” → Get results about “executive pay,” “leadership salaries,” “C-suite earnings” because they’re semantically similar, even though they share zero words.
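To make "search for meaning" concrete, here is a brute-force sketch of vector search using cosine similarity. The three-dimensional vectors are invented for illustration and stand in for the output of a real embedding model; `top_k` is a hypothetical helper:

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: close to 1.0 for the same direction, near 0 for unrelated vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest-neighbour search: score every stored vector, keep the k best."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Toy "embeddings" invented for illustration; real models output hundreds of dimensions.
index = {
    "executive pay": [0.90, 0.10, 0.05],
    "C-suite earnings": [0.85, 0.20, 0.10],
    "office parking rules": [0.05, 0.10, 0.95],
}
query = [0.88, 0.15, 0.07]  # pretend embedding of "CEO compensation"
print(top_k(query, index))  # the two pay-related entries rank first
```

Production vector databases replace this linear scan with approximate nearest-neighbour indexes such as HNSW, but the scoring idea is the same.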

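The compression is staggering. With Product Quantization, the vector for a 3KB chunk of text (for example, a 768-dimensional float32 embedding, which itself occupies 3,072 bytes) shrinks to a 96-byte code. That 32x reduction lets us keep about 30x more vectors in memory, enabling real-time search across billions of documents.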
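A minimal Product Quantization sketch in pure Python, assuming Euclidean distance and plain k-means codebooks: each vector is split into `m` sub-vectors, and each sub-vector is replaced by the index of its nearest centroid in that slot's codebook, so with `k = 256` centroids every sub-vector costs one byte. The toy run uses random 16-dimensional vectors; the 768-dimension and `m = 96` figures in the last lines are assumptions chosen to match the 3KB and 96-byte numbers in the text. All function names are hypothetical, and libraries such as FAISS provide optimized implementations:

```python
import random


def nearest(point, centroids):
    """Index of the centroid with the smallest squared Euclidean distance to `point`."""
    return min(range(len(centroids)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(point, centroids[j])))


def kmeans(points, k, iters=10, seed=0):
    """Plain Lloyd's k-means, used here to learn one codebook per subspace."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            buckets[nearest(p, centroids)].append(p)
        for j, bucket in enumerate(buckets):
            if bucket:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids


def train_pq(vectors, m, k=256):
    """Split vectors into m equal sub-vectors (dimension must divide by m); one codebook per slot."""
    d = len(vectors[0]) // m
    return [kmeans([v[i * d:(i + 1) * d] for v in vectors], k) for i in range(m)]


def encode(vector, codebooks):
    """Store only the nearest-centroid index per sub-vector: one byte each when k <= 256."""
    m = len(codebooks)
    d = len(vector) // m
    return bytes(nearest(vector[i * d:(i + 1) * d], codebooks[i]) for i in range(m))


def decode(code, codebooks):
    """Approximate reconstruction: concatenate the selected centroids."""
    return [x for i, c in enumerate(code) for x in codebooks[i][c]]


# Toy run: 500 random 16-dim vectors, 4 sub-vectors, 16 centroids per codebook.
rng = random.Random(42)
train = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(500)]
codebooks = train_pq(train, m=4, k=16)
code = encode(train[0], codebooks)
print(len(code))  # 4 bytes, versus 16 * 4 = 64 bytes as float32

# The article's figures, assuming 768-dim float32 embeddings and m = 96, k = 256:
raw_bytes = 768 * 4  # 3,072 bytes, about 3KB
pq_bytes = 96        # one byte per sub-vector
print(raw_bytes / pq_bytes)  # 32.0
```

The trade-off is precision: `decode` only recovers an approximation of the original vector, which is why PQ indexes are often paired with a re-ranking step on the top candidates.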

Vector Databases: What We Wish We’d Known

Choosing a vector database felt like choosing between 10 different variations of “really fast search.”

Here’s what actually matters in production:

The Honest Comparison (After Burning Through $50K in Trials)

Pinecone: The go-to serverless option. Unified inference (embeddings, search, and reranking in one call) cut our services from three to one and reduced latency by 40%. Serverless pricing is usage-based, billed on reads, writes, and storage rather than provisioned capacity: great for spiky traffic, costly at sustained high volume.

Weaviate: The hybrid search champion. Its BM25 + vector approach shines when semantic search alone falls short (codes, IDs, exact terms). Ideal for customer support use cases. Open-source with managed cloud.

Qdrant: The cost optimizer. Built in Rust, it used ~40% less memory than the other options we trialed, which adds up to huge savings at 500M+ vectors. Strong metadata filtering for permissions, document types, and time-based queries.

ChromaDB: Fast and lightweight. The 2025 Rust rewrite made it 4× faster, making it great for prototyping and sub-10M vector workloads. Built-in metadata and full-text search simplify setup.
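The hybrid approach credited to Weaviate above can be sketched as relative score fusion: normalize each result list to [0, 1], then blend with a weight `alpha`. Weaviate exposes a similar `alpha` parameter, but this is a simplified illustration rather than its implementation, and the document IDs and scores are invented:

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale one result list to [0, 1] so BM25 and cosine scores become comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_fuse(vector_scores: dict[str, float], keyword_scores: dict[str, float],
                alpha: float = 0.5) -> list[tuple[str, float]]:
    """alpha = 1.0 is pure vector search; alpha = 0.0 is pure keyword (BM25) search."""
    v, k = min_max(vector_scores), min_max(keyword_scores)
    fused = {doc: alpha * v.get(doc, 0.0) + (1 - alpha) * k.get(doc, 0.0)
             for doc in v.keys() | k.keys()}
    return sorted(fused.items(), key=lambda item: item[1], reverse=True)


# Invented scores for the query "error code E-4021": semantic search ranks a
# generic troubleshooting page first, while BM25 finds the exact error code.
vector_scores = {"troubleshooting-guide": 0.82, "error-E4021": 0.74, "billing-faq": 0.70}
keyword_scores = {"error-E4021": 12.3, "release-notes": 4.1}
print(hybrid_fuse(vector_scores, keyword_scores)[0][0])  # error-E4021
```

Normalizing first matters because raw BM25 scores are unbounded while cosine scores sit near [0, 1]; without it, whichever scale is larger would dominate the blend.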

Context Engineering: The Skill That Didn’t Exist 2 Years Ago

Forget schema design. Forget join optimization. The critical skill now is Context Engineering—curating exactly what information an AI sees.

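Here’s the problem: GPT-4 Turbo and GPT-4o have a 128K-token context window. That sounds huge until you do the arithmetic: at roughly 4 characters per token, 128K tokens is about 500KB of English text, while a single company’s knowledge base might be 50GB, some 100,000 times larger. You can’t send everything. You have to choose. And those choices determine whether your AI is helpful or hallucinates.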
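Choosing can be sketched as greedy packing under a token budget. This assumes retrieval has already scored each chunk and uses a rough 4-characters-per-token estimate in place of a real tokenizer (for OpenAI models, the `tiktoken` library counts tokens exactly); `Chunk` and `pack_context` are hypothetical names:

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    score: float  # relevance from retrieval; higher is better


def estimate_tokens(text: str) -> int:
    """Rough English-text heuristic of ~4 characters per token; not a real tokenizer."""
    return max(1, len(text) // 4)


def pack_context(chunks: list[Chunk], budget_tokens: int) -> list[Chunk]:
    """Greedily add the most relevant chunks that still fit within the token budget."""
    selected: list[Chunk] = []
    used = 0
    for chunk in sorted(chunks, key=lambda c: c.score, reverse=True):
        cost = estimate_tokens(chunk.text)
        if used + cost <= budget_tokens:  # skip chunks that would overflow, try smaller ones
            selected.append(chunk)
            used += cost
    return selected


candidates = [Chunk("a" * 400, 0.9), Chunk("b" * 4000, 0.8), Chunk("c" * 200, 0.5)]
picked = pack_context(candidates, budget_tokens=200)
print([c.text[0] for c in picked])  # ['a', 'c']: the 1,000-token chunk does not fit
```

In practice the budget should be well below the model's window, leaving room for the system prompt, the user's question, and the answer.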

The Questions Context Engineers Actually Ask

“How much history is relevant?” For customer support, maybe 3 recent tickets. For legal contracts, maybe the entire 10-year relationship. Time-decay isn’t one-size-fits-all.

“What metadata matters?” Document type? Author? Date? Department? In production, we store 15+ metadata fields per chunk. Filtering by user role prevents leaking confidential data. Filtering by date prevents serving outdated policies.

“How do we handle contradictions?” Your 2023 pricing doc says $500/month. Your 2025 doc says $900/month. If both are retrieved, the LLM invents a narrative. Solution: timestamp-based deduplication and explicit version tagging.

“What’s the optimal chunk overlap?” We tested 0-word, 50-word, 100-word, and 200-word overlaps. Sweet spot: 50 words preserves continuity without duplicating too much content. Retrieval quality improved by 23%.
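The answers to the first three questions can be combined into one post-retrieval curation step. A minimal sketch, assuming each chunk carries hypothetical `doc_id`, `updated`, and `allowed_roles` metadata fields; the half-life formula is one common way to implement time decay, chosen here for illustration, with the half-life set per domain:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Retrieved:
    doc_id: str              # stable ID shared by every version of a document
    updated: date            # when this version was published
    allowed_roles: set[str]  # roles permitted to see this chunk
    score: float             # similarity score from the vector search
    text: str


def curate(hits: list[Retrieved], user_role: str, today: date,
           half_life_days: float) -> list[Retrieved]:
    # 1. Permission filter: the LLM never sees what the user may not see.
    visible = [h for h in hits if user_role in h.allowed_roles]
    # 2. Version dedup: keep only the newest version of each document.
    latest: dict[str, Retrieved] = {}
    for h in visible:
        if h.doc_id not in latest or h.updated > latest[h.doc_id].updated:
            latest[h.doc_id] = h
    # 3. Time decay: a chunk's score halves every `half_life_days`.
    def decayed(h: Retrieved) -> float:
        return h.score * 0.5 ** ((today - h.updated).days / half_life_days)
    return sorted(latest.values(), key=decayed, reverse=True)


hits = [
    Retrieved("pricing", date(2023, 1, 10), {"sales", "support"}, 0.91, "Plan costs $500/month"),
    Retrieved("pricing", date(2025, 2, 1), {"sales", "support"}, 0.88, "Plan costs $900/month"),
    Retrieved("margins", date(2025, 1, 5), {"finance"}, 0.95, "Internal margin data"),
]
for h in curate(hits, user_role="support", today=date(2025, 3, 1), half_life_days=180):
    print(h.text)  # only "Plan costs $900/month" survives
```

A short half-life suits support content; a multi-year one suits contracts. In production the permission check is better pushed into the vector database query as a metadata filter, so restricted chunks never leave the index at all.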

This isn’t coding. It’s curation. It’s editorial. It’s part librarian, part detective, part psychologist trying to predict what an AI needs to be helpful.

Why LLMs Hallucinate: It’s Your Data Pipeline’s Fault

Let’s get brutally honest about hallucinations. They’re not mysterious AI failures—they’re data engineering failures.

We analyzed 10,000 hallucinated responses in our production systems. Here’s what caused them:

47% – Incomplete context retrieved: The relevant information existed, but our retrieval didn’t find it. Better chunking, better embeddings, hybrid search fixed this.

31% – Contradictory information: Multiple documents with conflicting data. LLM tried to reconcile them and invented fiction. Solution: version control, timestamp filtering, source authority scoring.

18% – Stale embeddings: Source document updated, but embedding pipeline ran weekly. LLM cited outdated info. Moving to real-time streaming embeddings reduced this to near zero.

4% – Actual model failure: LLM genuinely made stuff up even with perfect context. This is the only category you can’t fix with better data engineering.

In our sample, 96% of hallucinations (the first three categories combined) were fixable with better data pipelines. This is a data engineering problem disguised as an AI problem.
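For the stale-embedding category, the piece that makes incremental (rather than weekly) re-embedding possible is change detection. A minimal sketch, assuming each source document has a stable ID and the pipeline stores a SHA-256 hash of the content it last embedded; `plan_reembedding` is a hypothetical helper that a streaming pipeline would call on each change event instead of on a schedule:

```python
import hashlib


def content_hash(text: str) -> str:
    """Fingerprint of a document's content; any edit changes the hash."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reembedding(documents: dict[str, str],
                     indexed_hashes: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (doc IDs to re-embed, doc IDs whose vectors should be deleted)."""
    to_embed = [doc_id for doc_id, text in documents.items()
                if indexed_hashes.get(doc_id) != content_hash(text)]  # new or changed
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in documents]  # removed
    return to_embed, to_delete


# Hashes recorded when the index was last built.
indexed = {
    "pricing": content_hash("Plan costs $500/month"),
    "faq": content_hash("Reset your password from Settings"),
    "legacy-policy": content_hash("Old refund policy"),
}
# Current state of the source system.
current = {
    "pricing": "Plan costs $900/month",
    "faq": "Reset your password from Settings",
}
print(plan_reembedding(current, indexed))  # (['pricing'], ['legacy-policy'])
```

Only the changed pricing page is re-embedded, and vectors for the deleted policy are removed, so neither outdated answer can be retrieved.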

Trust, Security & Governance of Data in the LLM Era

Here’s a scary thought: LLMs amplify data issues 10x faster than dashboards ever could. A typo in a dashboard might mislead one analyst. A typo in an AI’s context might misinform thousands of users!

“AI trust is a data engineering outcome.”

If data engineers fail, AI systems become unpredictable.

The New Data Governance Checklist

- Access control on embeddings (not just source data)

- PII masking before vectorization

- Source attribution in AI responses

- Auditable and explainable outputs

- Version control for embeddings and contexts
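PII masking before vectorization can be sketched with pattern-based redaction, so neither the stored chunk text nor its embedding carries the raw values. The two patterns are illustrative assumptions covering only common email formats and North American phone numbers; production pipelines usually rely on a dedicated PII detector (Microsoft Presidio is one open-source option):

```python
import re

# Illustrative patterns only (common email shapes, North American phone numbers).
# Real PII detection needs much broader coverage: names, addresses, IDs, and more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"(?:\+?\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}"),
}


def mask_pii(text: str) -> str:
    """Replace each match with a typed placeholder before the text is chunked and embedded."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(mask_pii("Contact jane.doe@example.com or (415) 555-0100 before 2025-03-01."))
# Contact [EMAIL] or [PHONE] before 2025-03-01.
```

Typed placeholders such as `[EMAIL]` keep the sentence meaningful for retrieval while removing the value itself; note that the date is left untouched because it has too few digits to match the phone pattern.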

The Bottom Line: Adapt or Fall Behind

Your carefully built data warehouse isn’t obsolete—but it’s no longer sufficient. Executive dashboards aren’t disappearing—but they’re no longer enough. AI doesn’t want aggregates; it wants context, meaning, and the raw, messy, nuanced knowledge humans actually use to decide.

And building systems that deliver that? That’s the frontier of AI-ready data engineering.

“Dashboards helped humans understand data. LLMs help data understand itself. Data engineers make both possible.”

Ready to evolve your data infrastructure for the AI era?

Contact 47Billion to discuss how our ELEVATE framework can transform your data pipelines from ETL to ETV—delivering AI systems that your organization will actually trust and use.