Welcome to the second part of the blog series “The Symbiosis between Machine Learning and Blockchain.” In the previous blog, we discussed use cases of Machine Learning integrated with Blockchain. Please go through the blog.

In this blog, we will learn more about the challenges faced in machine learning and how integrating Blockchain into machine learning can solve those challenges.

As we know, access to Machine Learning algorithms is centralized with giant tech companies. They have control over an unprecedented amount of data in their data silos.

Here is a scenario! What if everyone – individual or organization- can contribute their private data and get compensated to create a database that preserves the privacy of the data yet allows AI models to train on them? It would provide data scientists with the required organized and labeled data. Decentralized Machine Learning (DML) can assist in making this feat possible.

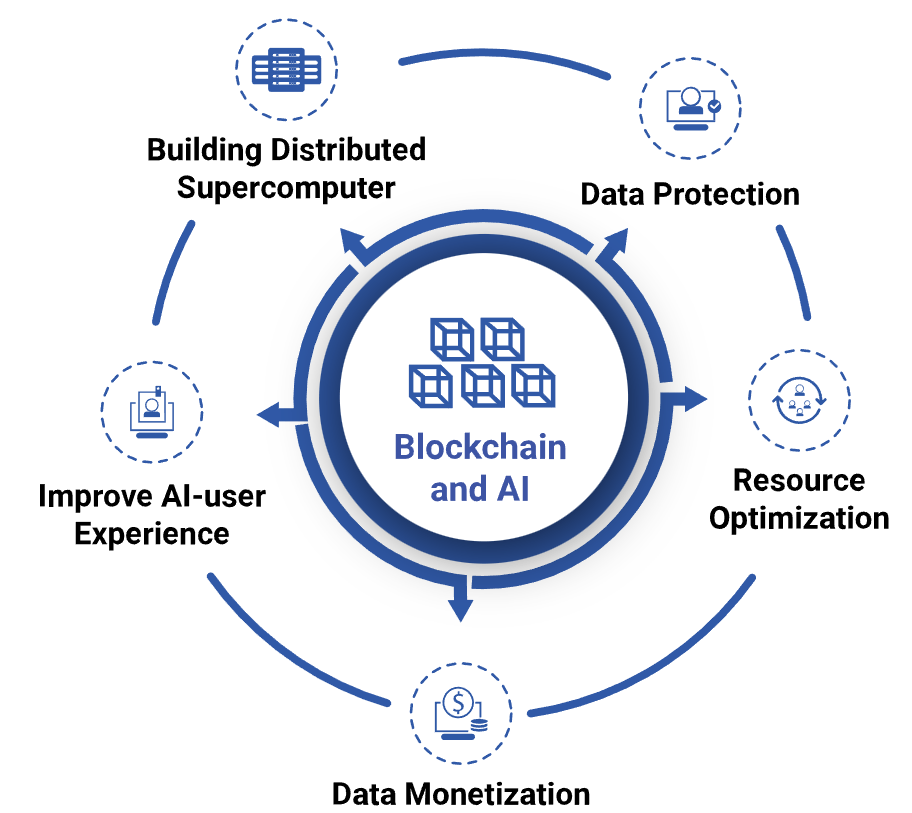

DML involves training a machine learning model on data from a blockchain-based data marketplace. It is an infrastructure that addresses issues related to centralization in AI. These include data privacy, centralized model training, and expensive computation.

Let’s see in detail how DML solves these problems. Decentralization can mainly affect ML’s three main building blocks: the data, the model, and the computing power.

We recommend a smart contract-based data marketplace where individuals get incentives to provide data. This data is referred to but is not limited to the medical and financial sectors, where data security and privacy are of utmost importance. Interested researchers can use this data with permission to train their ML models. Some of these data marketplaces are Datum, Ocean-Protocol, and BigChainDB.

Let’s discuss in brief how they work.

Datum

Datum Network provides a blockchain-based decentralized private data storage platform. Here the users can securely store their private data or the data extracted from edge devices like smartphones, IoT sensors etc. with which they interact. Here are some of its key features-

- Here the users can decide with whom to share the data.

- The Data scientists and researchers can request the data from the owners.

- Storing and monetizing data are powered by Datum’s native token, Data Access Token (DAT), based on the ERC-20 on Ethereum Mainnet.

- The data is stored as a key-value pair, ensuring data integrity, availability, low latency, and data searching.

- Data providers, data consumers, and storage nodes are the critical stakeholders of Datum and are suitably rewarded for their contributions.

- Data providers – They are rewarded for providing the data and giving permission to use it. They also get access to secure and infinitely scalable data storage.

- Data consumers – They get access to verified and validated data directly.

- Storage nodes – They host data storage and provide computation.

Let’s discuss how Datum works –

- The users’ data is divided into pieces, and the hash values of each piece are stored in the Blockchain using Datum SDK. It is because storing the entire data in Blockchain is expensive.

- It provides an immutable log of who held what data, when, and who should have access to or who contributed it.

- The main functionality is implemented through smart contracts and can be run on any blockchain that supports smart contracts, such as, ETH and NEO.

For more details on Datum, you can refer to its documentation.

Ocean Protocol

Ocean Protocol has a decentralized data marketplace where users can earn by publishing their data, staking on data (curate), and selling their data. They introduced an exciting concept where data owners can tokenize their dataset into ERC721 data-NFTs and ERC20 data tokens. Anyone who owns the Data-NFT owns that data and can decide who uses it and how to generate revenues from it. The data tokens are a medium to transfer data ownership.

Along with this, they provide a compute-to-data algorithm that lets the data stay on-premise of the user, yet (by consent of data owners) allowing researchers or data scientists to run AI models or any other computing jobs on the data without ever sharing the data itself.

Data owners can create a liquidity pool and launch an initial data offering (IDO) which allows data to be investable via ERC20 tokens. These tokens can help access the data or trade it to make profits. Every dataset now has data tokens that act as the underlying currency of the web 3.0 economy, the ocean token.

These are only some of the functionalities of Ocean-Protocol. To learn more please check out the documentation.

BigChainDB

BigChainDB is another example of a blockchain-based, scalable, and distributed ‘Big Data’ database.

It adds vital blockchain properties to this database. BigChainDB has smart features, such as

- Decentralization

- Byzantine fault tolerance

- Node diversity

- Immutability

- Native support of assets.

Let’s discuss how BigChainDB stores its data.

The data can be stored Off-Chain, i.e., in the third-party database management system. It helps keep track of permission records, request records, hashes of the document stored elsewhere, recording all handshake-establishing requests and responses between two off-chain parties.

Alternatively, On-Chain data storage is also an option here.

- We can encrypt the data, store the ciphertext on-chain and then use the public key of the ciphertext to send the data. The receiver can decrypt it by using the private key.

- We can also use proxy re-encryption to store and share our private data. A proxy player can generate and provide a “re-encryption key” to convert and share the data. The person who needs the data can decrypt the original data using his private key.

- Another method is erasure-coding. Here the data is split into n pieces, and each is encrypted using a different key. The encrypted parts are then stored on-chain. If k < N of the key-holders gets and decrypts k of the pieces, they can reconstruct the original plaintext.

To learn more about BigChainDB, please check out the documentation.

These are just some projects working on decentralized data storage and distribution. Researchers can use such tools to access private data to train machine learning models.

That was all about the first pillar of machine learning: data.

However, there are other aspects of DML: decentralized ML model training and decentralized computation power.

Please go through the next two blogs in the series to learn how integrating Blockchain in Machine Learning can solve challenges faced while training a machine learning model and the computation power used in it.