Moving from direct API calls to unified LLM infrastructure—and why it matters at scale

The Problems Nobody Warns You About

When we started building AI-powered products, the setup was simple. Pick a provider, add an API key, ship features. OpenAI’s API worked. We moved fast.

Then reality set in.

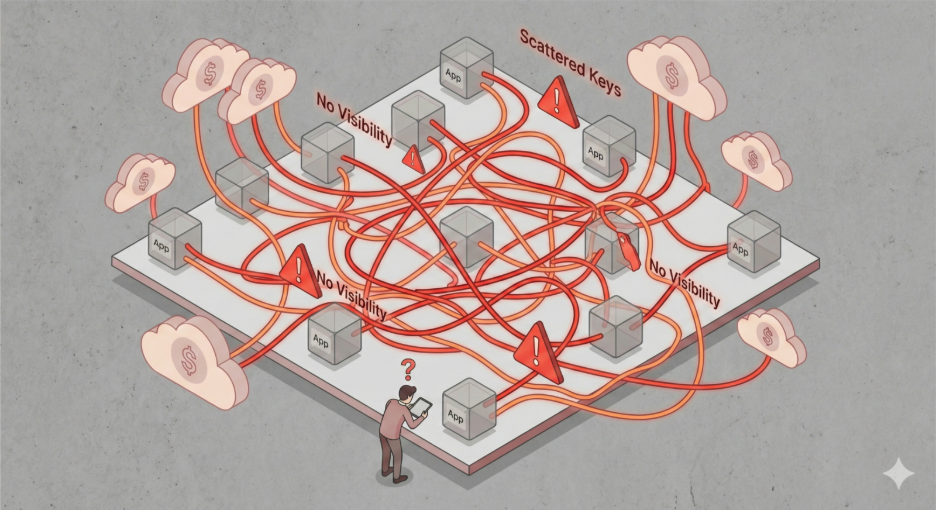

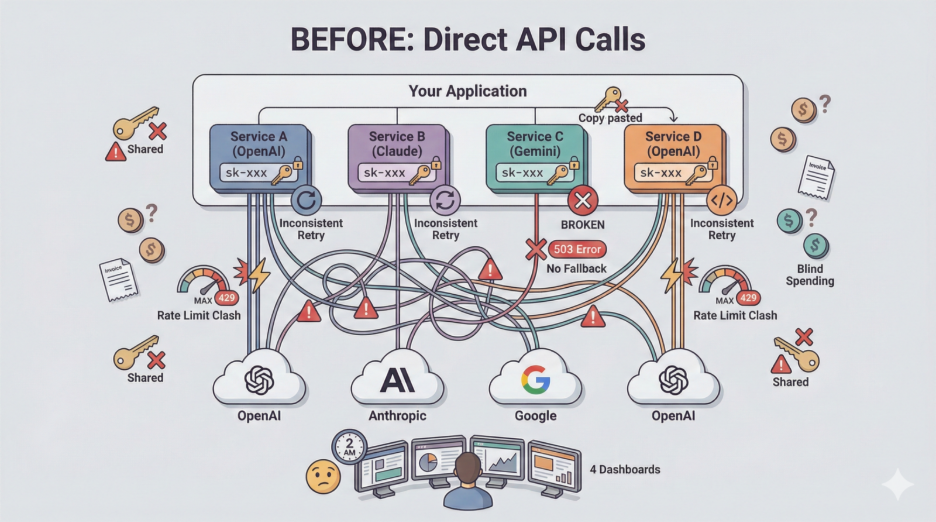

The Key Management Problem. We had one OpenAI key shared across the entire team. Developers needed access. Different services needed different models. One team member accidentally ran expensive GPT-4 calls during testing—$10 gone in minutes. No visibility into who spent what. No way to set limits. Raw API keys scattered across repositories, environment variables, and Slack messages.

The Rate Limit Disaster. Production traffic spiked. OpenAI rate limits hit. Users saw errors. There was no fallback. No retry logic. The application just… failed. At 2 AM, when traffic peaks, debugging meant checking three different dashboards across three different providers.

The Cost Surprise. End of the month. Finance asks: “LLM costs are 3x budget. What happened?” We check OpenAI’s dashboard. Then Azure. Then Google Cloud. Then Anthropic. Four different billing cycles. Four different formats. No unified view. No per-team attribution. There is no way to know which feature or which customer caused the spike.

These problems don’t appear on day one. They appear when you scale—when you have multiple services, multiple teams, multiple providers, and actual users depending on your system.

The solution isn’t better code discipline. It’s an architectural pattern: the LLM Gateway.

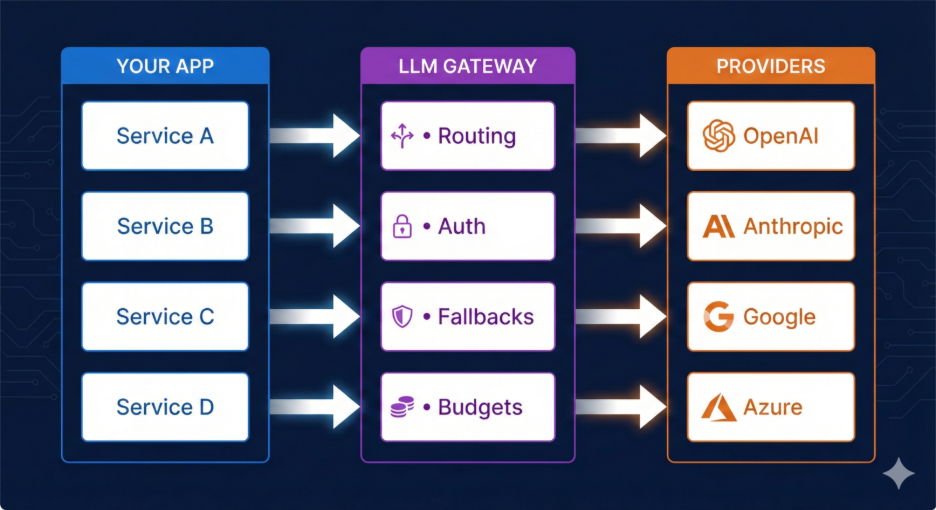

What Is an LLM Gateway?

An LLM Gateway sits between your application and LLM providers. Every request flows through it. Every response comes back through it.

Think of it as Nginx for LLMs. Your services don’t talk directly to OpenAI or Claude or Gemini. They talk to the gateway. The gateway handles everything else.

This isn’t a new pattern. API gateways have existed for decades. But LLMs introduce unique challenges—variable costs per token, different response formats across providers, rate limits that vary by model, and the need for intelligent fallbacks when a provider goes down.

The Core Problems an LLM Gateway Solves

1. SDK Fragmentation

Every provider ships their own SDK. OpenAI has one format. Anthropic has another. Google Vertex has a third. Each has different authentication, different request structures, and different response schemas.

Switching from GPT-4 to Claude means:

- Changing import statements

- Refactoring prompt formats

- Updating error handling

- Modifying response parsing

- Adjusting retry logic

An LLM Gateway abstracts this. You write to one interface—typically OpenAI-compatible—and the gateway translates for each provider. Switching models becomes a configuration change, not a code change.

    # Without gateway: provider-specific code
    from openai import OpenAI

    client = OpenAI(api_key="sk-…")
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": "Hello"}],
    )

    # With gateway: same code, any provider
    from openai import OpenAI

    client = OpenAI(
        api_key="virtual-key-123",          # Gateway's virtual key
        base_url="https://your-gateway/v1", # Points to the gateway
    )
    response = client.chat.completions.create(
        model="claude-3-sonnet",            # Just change the model string
        messages=[{"role": "user", "content": "Hello"}],
    )

The application code stays identical. The gateway handles the translation.
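To make "translation" concrete, here is a minimal sketch of one such mapping: turning an OpenAI-style chat payload into the shape Anthropic's Messages API expects, where the system prompt is a top-level field and `max_tokens` is required. The helper name `to_anthropic_request` and the default of 1024 tokens are assumptions for illustration. A real gateway also has to map streaming, tool calls, and responses.

```python
from typing import Any


def to_anthropic_request(openai_request: dict[str, Any],
                         default_max_tokens: int = 1024) -> dict[str, Any]:
    """Convert an OpenAI-style chat payload into an Anthropic-style one.

    Hypothetical helper: handles plain text messages only.
    """
    system_parts = []
    messages = []
    for message in openai_request["messages"]:
        if message["role"] == "system":
            # Anthropic takes the system prompt as a top-level field.
            system_parts.append(message["content"])
        else:
            messages.append({"role": message["role"],
                             "content": message["content"]})

    translated = {
        "model": openai_request["model"],
        "messages": messages,
        # Required by the Messages API, optional in OpenAI's format.
        "max_tokens": openai_request.get("max_tokens", default_max_tokens),
    }
    if system_parts:
        translated["system"] = "\n\n".join(system_parts)
    return translated


request = {
    "model": "claude-3-sonnet",
    "messages": [
        {"role": "system", "content": "You are terse."},
        {"role": "user", "content": "Hello"},
    ],
}
anthropic_request = to_anthropic_request(request)
```

The application keeps sending the first shape, and the gateway produces the second before forwarding the call.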

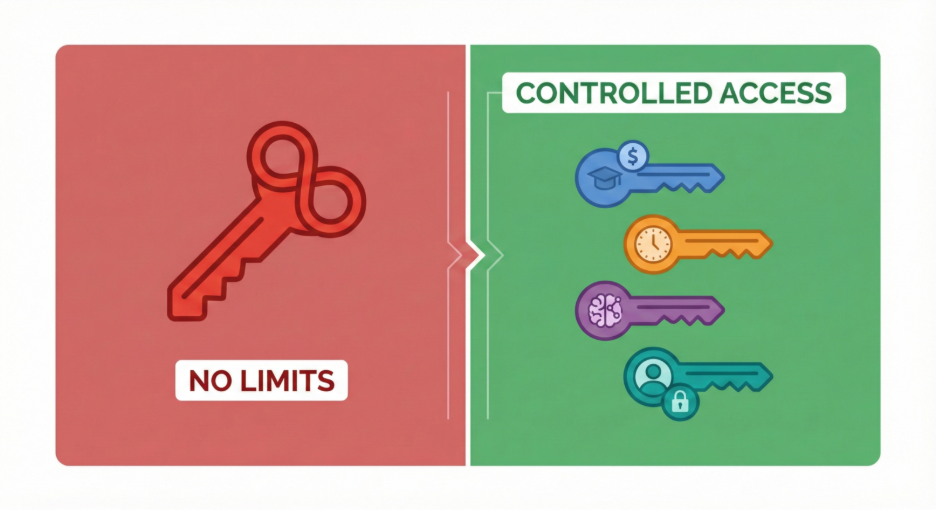

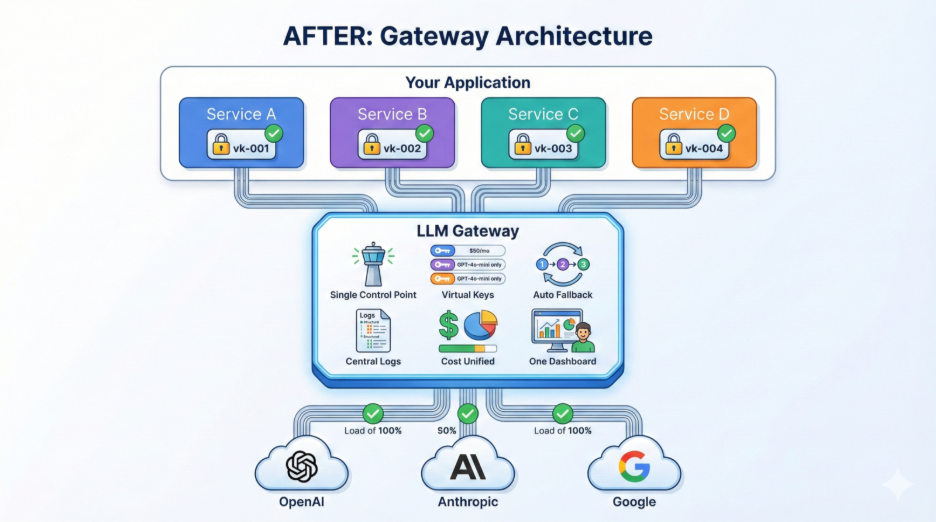

2. Virtual Keys and Access Control

Raw API keys are dangerous. They have no spending limits, no model restrictions, no audit trail. Sharing them across teams means anyone can run any model at any cost.

LLM Gateways introduce virtual keys—keys that map to your real provider keys but with constraints:

- Budget limits: “This key can spend max $50/month.”

- Model restrictions: “This key can only access GPT-4o-mini, not GPT-4.”

- Rate limits: “Max 100 requests per minute.”

- Team attribution: “All usage on this key bills to the Marketing team.”

- Expiration: “This key expires in 30 days.”

When a developer needs LLM access, you generate a virtual key with appropriate limits. If they leave the team, you revoke the key. The real provider keys never leave your infrastructure.
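The constraints listed above can be sketched as a small record plus a check that runs before each request is forwarded. The `VirtualKey` fields and the `authorize` function are hypothetical names, not any particular gateway's schema, and rate limiting is left out to keep the example short.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class VirtualKey:
    """Hypothetical virtual key record; field names are illustrative."""
    key_id: str
    team: str
    allowed_models: set[str]
    monthly_budget_usd: float
    expires_at: datetime
    spent_usd: float = 0.0
    revoked: bool = False


def authorize(key: VirtualKey, model: str, estimated_cost_usd: float,
              now: datetime) -> tuple[bool, str]:
    """Return (allowed, reason) for a request made with this key."""
    if key.revoked:
        return False, "key revoked"
    if now >= key.expires_at:
        return False, "key expired"
    if model not in key.allowed_models:
        return False, f"model {model} not allowed"
    if key.spent_usd + estimated_cost_usd > key.monthly_budget_usd:
        return False, "budget exceeded"
    return True, "ok"


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
marketing_key = VirtualKey(
    key_id="virtual-key-123",
    team="Marketing",
    allowed_models={"gpt-4o-mini"},
    monthly_budget_usd=50.0,
    expires_at=now + timedelta(days=30),
)
print(authorize(marketing_key, "gpt-4o-mini", 0.02, now))
print(authorize(marketing_key, "gpt-4", 0.02, now))
```

Revoking access is then a matter of setting `revoked` on one record. The real provider key is never touched.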

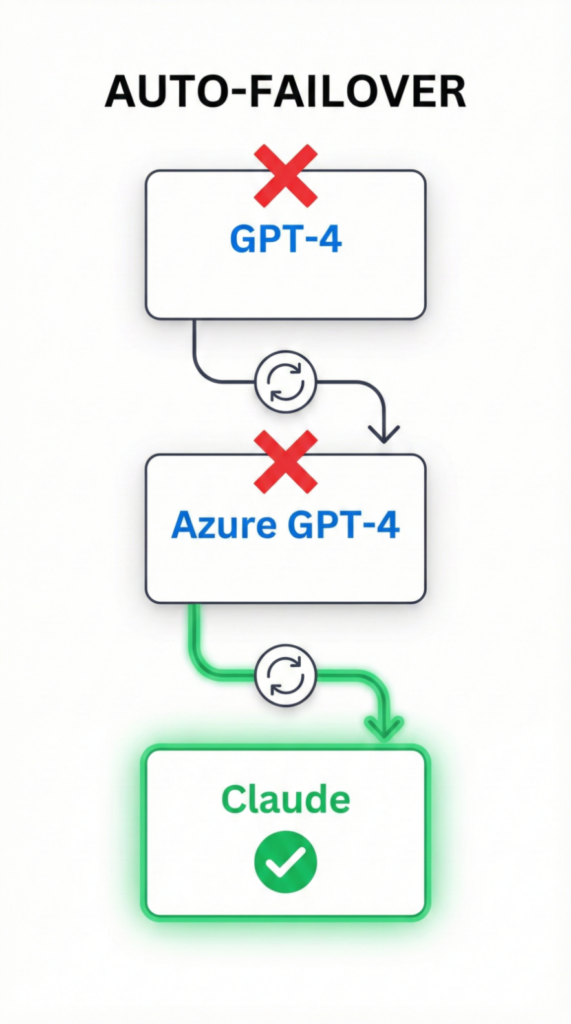

3. Intelligent Fallbacks and Retries

Providers go down. Rate limits get hit. Regions have latency spikes. Without a gateway, your application fails.

With a gateway, you define fallback chains: an ordered list of providers to try when the primary fails or is rate limited.

The gateway handles retries automatically. If OpenAI returns a rate limit error, it waits and retries. If that fails, it routes to Azure. If Azure is down, it falls back to Claude. Your application sees a successful response—it never knows there was a problem.

Importantly, fallbacks can be configured per use case. For a creative writing feature, falling back on any capable model works. For a specialized task requiring specific model capabilities, you restrict fallbacks to equivalent models only.
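The retry-then-fall-back behavior can be sketched in a few lines. The exception classes and provider stubs below are stand-ins invented for this example rather than a real SDK's types, and the retry count and backoff delay are arbitrary assumptions.

```python
import time
from typing import Callable, Optional


class RateLimitError(Exception):
    """Stand-in for a provider's rate limit (HTTP 429) error."""


class ProviderDownError(Exception):
    """Stand-in for a provider outage."""


def call_with_fallbacks(chain: list[tuple[str, Callable[[str], str]]],
                        prompt: str,
                        retries_per_provider: int = 2,
                        base_delay_s: float = 0.01) -> tuple[str, str]:
    """Try each provider in order; return (provider_name, response)."""
    last_error: Optional[Exception] = None
    for name, call in chain:
        for attempt in range(retries_per_provider + 1):
            try:
                return name, call(prompt)
            except RateLimitError as err:
                last_error = err
                if attempt < retries_per_provider:
                    # Exponential backoff before retrying the same provider.
                    time.sleep(base_delay_s * (2 ** attempt))
            except ProviderDownError as err:
                last_error = err
                break  # Retrying an outage is pointless; move down the chain.
    raise RuntimeError("all providers in the chain failed") from last_error


calls = {"openai": 0}


def openai_stub(prompt: str) -> str:
    calls["openai"] += 1
    raise RateLimitError("429 from primary")


def azure_stub(prompt: str) -> str:
    raise ProviderDownError("deployment unavailable")


def claude_stub(prompt: str) -> str:
    return f"echo: {prompt}"


chain = [("openai", openai_stub), ("azure", azure_stub), ("claude", claude_stub)]
provider, reply = call_with_fallbacks(chain, "Hello")
```

Restricting fallbacks per use case amounts to passing a shorter chain for features that need a specific model's capabilities.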

4. Load Balancing Across Deployments

Large-scale deployments often use multiple provider accounts—different Azure OpenAI deployments across regions, multiple OpenAI organization accounts, and a mix of direct and marketplace subscriptions.

An LLM Gateway balances traffic across these:

    ┌─────────────────┐     ┌─────────────────────────────────────────┐
    │                 │     │              Load Balancer              │
    │   Application   │────▶│   ┌─────────────────────────────────┐   │
    │                 │     │   │ Azure OpenAI (East US) - 40%    │   │
    └─────────────────┘     │   │ Azure OpenAI (West EU) - 40%    │   │
                            │   │ OpenAI Direct          - 20%    │   │
                            │   └─────────────────────────────────┘   │
                            └─────────────────────────────────────────┘

Traffic is distributed based on availability, latency, or cost. If one deployment hits quota, traffic shifts to others automatically.
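A weighted split like the 40/40/20 example can be sketched with a weighted random choice that skips deployments currently out of quota. The deployment names and the `pick_deployment` helper are assumptions for illustration. Production gateways typically also factor in live latency and error rates.

```python
import random


def pick_deployment(weights: dict[str, float],
                    unavailable: set[str],
                    rng: random.Random) -> str:
    """Weighted random choice over deployments that still have quota."""
    candidates = {name: weight for name, weight in weights.items()
                  if name not in unavailable and weight > 0}
    if not candidates:
        raise RuntimeError("no deployment available")
    names = list(candidates)
    return rng.choices(names, weights=[candidates[n] for n in names], k=1)[0]


weights = {
    "azure-east-us": 40,
    "azure-west-eu": 40,
    "openai-direct": 20,
}
rng = random.Random(0)  # Seeded so the simulation is repeatable.

sample = [pick_deployment(weights, set(), rng) for _ in range(10_000)]
share = {name: sample.count(name) / len(sample) for name in weights}

# Simulate East US hitting quota: its traffic shifts to the other two.
after_quota = [pick_deployment(weights, {"azure-east-us"}, rng)
               for _ in range(1_000)]
```

Because the remaining weights are renormalized implicitly, the two surviving deployments keep their 2:1 ratio when the third drops out.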

5. Centralized Cost Management

LLM costs are notoriously hard to track. Token counts vary by model. Pricing changes frequently. Different features use different models.

A gateway provides:

- Real-time spend tracking per key, per team, per model

- Budget alerts when approaching limits

- Hard cutoffs that prevent overspend

- Cost attribution to specific features or customers

- Historical analysis for capacity planning

When finance asks, “Why did costs spike?”, you have answers. You know exactly which team, which model, which day.
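Per-team attribution with a hard cutoff could look roughly like the sketch below. The model names and per-token prices are made up for the example (real prices differ by provider and change often), and `SpendTracker` is a hypothetical class rather than a gateway API.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens, for illustration only.
PRICES_PER_MILLION = {
    "model-large": {"input": 10.00, "output": 30.00},
    "model-small": {"input": 0.50, "output": 1.50},
}


class SpendTracker:
    def __init__(self, budgets_usd: dict[str, float]):
        self.budgets_usd = budgets_usd
        # (team, model) -> USD spent so far
        self.spend: dict[tuple[str, str], float] = defaultdict(float)

    def request_cost(self, model: str, input_tokens: int,
                     output_tokens: int) -> float:
        price = PRICES_PER_MILLION[model]
        return (input_tokens * price["input"]
                + output_tokens * price["output"]) / 1_000_000

    def team_total(self, team: str) -> float:
        return sum(usd for (t, _), usd in self.spend.items() if t == team)

    def record(self, team: str, model: str, input_tokens: int,
               output_tokens: int) -> bool:
        """Record usage; return False (hard cutoff) if it would exceed budget."""
        cost = self.request_cost(model, input_tokens, output_tokens)
        if self.team_total(team) + cost > self.budgets_usd[team]:
            return False
        self.spend[(team, model)] += cost
        return True


tracker = SpendTracker({"marketing": 50.0})
first = tracker.record("marketing", "model-small", 2_000, 500)
second = tracker.record("marketing", "model-large", 1_000_000, 1_000_000)
third = tracker.record("marketing", "model-large", 1_000_000, 0)  # over budget
```

Keying spend by `(team, model)` is what lets the same data answer both "which team" and "which model" when costs spike.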

6. Unified Logging and Observability

Without a gateway, logs scatter across providers. OpenAI logs live in their dashboard. Anthropic logs live elsewhere. Correlating a user request across multiple LLM calls requires stitching together disparate systems.

A gateway centralizes this:

- Every request is logged with a consistent schema

- Request/response pairs correlated

- Latency tracked per provider, per model

- Error rates monitored

- Token usage recorded

Debugging becomes tractable. When a user reports a problem, you trace their request through one system.
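A consistent schema can be as simple as one record type written as JSON lines. The `LLMCallLog` fields below are an assumed schema chosen for this example. What matters is that every provider's calls share it and carry the same trace identifier.

```python
import json
import uuid
from dataclasses import asdict, dataclass


@dataclass
class LLMCallLog:
    """Hypothetical log record; field names are illustrative."""
    trace_id: str        # shared by every LLM call serving one user request
    call_id: str
    provider: str
    model: str
    latency_ms: float
    input_tokens: int
    output_tokens: int
    status: str          # "ok" or an error class such as "rate_limited"


def log_line(record: LLMCallLog) -> str:
    """Serialize one record as a single JSON line."""
    return json.dumps(asdict(record), sort_keys=True)


trace_id = str(uuid.uuid4())
records = [
    LLMCallLog(trace_id, "call-1", "openai", "gpt-4",
               812.0, 120, 0, "rate_limited"),
    LLMCallLog(trace_id, "call-2", "anthropic", "claude-3-sonnet",
               640.5, 120, 280, "ok"),
    LLMCallLog(str(uuid.uuid4()), "call-3", "openai", "gpt-4",
               530.0, 90, 210, "ok"),
]
lines = [log_line(record) for record in records]

# Tracing one user's request is a filter on one field in one system.
parsed = [json.loads(line) for line in lines]
trace = [entry for entry in parsed if entry["trace_id"] == trace_id]
```

Here the trace shows a rate-limited call followed by a successful fallback, which would otherwise be split across two providers' dashboards.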

The Architecture: Before and After

Before: Direct API Calls

After: Gateway Architecture

LangChain vs. LLM Gateway: Different Layers, Different Problems

A common question: “We already use LangChain. Why do we need an LLM Gateway?”

They solve different problems at different layers.

| Aspect | LangChain | LLM Gateway |
| --- | --- | --- |
| Layer | Application framework | Infrastructure layer |
| Focus | How you build AI apps | How you call LLMs reliably |
| Concerns | Chains, agents, RAG, memory | Routing, auth, fallbacks, cost |
| When it matters | Development time | Runtime |
| Analogy | React (how you build UI) | Nginx (how traffic routes) |

LangChain helps you structure your application—chaining prompts, managing conversation memory, and building agents. It’s a development framework.

An LLM Gateway ensures your LLM calls succeed in production—handling failures, managing costs, enforcing limits. It’s infrastructure.

You use both together:

    ┌─────────────────────────────────────────────────────────────────┐
    │                        Your Application                         │
    │  ┌───────────────────────────────────────────────────────────┐  │
    │  │                         LangChain                         │  │
    │  │  • Prompt chains      • Agents      • RAG pipelines       │  │
    │  │  • Memory management  • Output parsing                    │  │
    │  └─────────────────────────────┬─────────────────────────────┘  │
    └────────────────────────────────┼────────────────────────────────┘
                                     │
                                     ▼
                    ┌─────────────────────────────────┐
                    │           LLM Gateway           │
                    │  • Routing        • Fallbacks   │
                    │  • Cost tracking  • Auth        │
                    └─────────────────────────────────┘
                                     │
                                     ▼
                               LLM Providers

LangChain handles application logic. The gateway handles infrastructure concerns. Neither replaces the other.

Self-Hosted vs. Managed Gateways

LLM Gateways come in two flavors:

Managed Services (Portkey, Helicone, OpenRouter): You send requests to their cloud. They handle infrastructure. Faster to start, but your prompts and data flow through third-party servers. Adds latency due to an extra network hop. Pricing scales with usage.

Self-Hosted Solutions (LiteLLM, Kong AI Gateway): You deploy on your infrastructure. Your data never leaves your network. More setup effort, but full control. Better for compliance-sensitive workloads, lower latency, and predictable costs.

For production systems handling sensitive data—healthcare, finance, enterprise—self-hosted is often the only option. The compliance and data residency requirements make managed services non-viable.

The Trade-Offs: What You Should Know

An LLM Gateway isn’t free. It introduces trade-offs.

Single Point of Failure. If the gateway goes down, all LLM calls fail. This is manageable—you run multiple gateway instances behind a load balancer—but it’s additional infrastructure to maintain. You’re adding a dependency that didn’t exist before.

Latency Overhead. Every request now has an extra hop. In our testing, self-hosted gateways add 8-15ms. Managed gateways can add 50-100ms depending on the region. For most applications, this is negligible compared to LLM inference time (500ms-2s). For real-time voice applications where every millisecond matters, it’s worth measuring.
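To put those figures in proportion, the arithmetic below expresses gateway overhead as a share of inference time, using only the ranges quoted above. The `overhead_pct` helper is a name introduced for this example.

```python
def overhead_pct(gateway_ms: float, inference_ms: float) -> float:
    """Gateway latency as a percentage of model inference time."""
    return 100 * gateway_ms / inference_ms


# Best case pairs the smallest overhead with the slowest inference (2 s);
# worst case pairs the largest overhead with the fastest inference (500 ms).
self_hosted = (overhead_pct(8, 2000), overhead_pct(15, 500))
managed = (overhead_pct(50, 2000), overhead_pct(100, 500))

print(f"self-hosted: {self_hosted[0]:.1f}% to {self_hosted[1]:.1f}%")
print(f"managed:     {managed[0]:.1f}% to {managed[1]:.1f}%")
```

Under these numbers a self-hosted gateway stays within a few percent of inference time, while a managed gateway in front of a fast model is the case worth measuring.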

Infrastructure Burden. Someone has to run the gateway. That means deployment pipelines, monitoring, scaling, and upgrades. For small teams, this overhead might not be worth it. For larger teams already running Kubernetes and microservices, it’s a marginal additional effort.

Lowest Common Denominator. Gateways standardize on a common interface, usually OpenAI’s format. Provider-specific features (Gemini’s native video processing, Claude’s prompt caching) might not be immediately supported. You sometimes lose access to cutting-edge capabilities until the gateway adds support.

Open Source Volatility. Open-source gateways update frequently. New features are great, but breaking changes happen. Version upgrades require testing. We’ve had deployments break after updates because a config format changed.

These trade-offs are real. For a solo developer or a single-model prototype, direct API calls are simpler. The gateway pattern pays off when you have multiple services, multiple teams, or multiple providers—when the coordination cost exceeds the gateway’s overhead.

When to Use an LLM Gateway

You need a gateway if:

- Multiple services call LLMs independently

- Multiple team members need LLM access with different limits

- You use (or plan to use) multiple LLM providers

- Cost attribution matters for billing or budgeting

- Compliance requires audit trails and access controls

- Uptime matters—you can’t afford provider outages taking down your app

You probably don’t need a gateway if:

- Single application, single developer

- One LLM provider, no plans to change

- Prototype or MVP stage

- Cost tracking isn’t a concern yet

The gateway adds value proportional to complexity. Simple setups don’t need it. Complex setups can’t function without it.

Conclusion

Direct API calls work until they don’t. The problems emerge gradually: scattered keys, surprise costs, production failures at 2 AM, and debugging across a separate dashboard for every provider.

An LLM Gateway addresses these systematically. It’s not about any single feature—virtual keys, fallbacks, cost tracking, load balancing—it’s about having a single layer that handles LLM infrastructure concerns so your application code can focus on application logic.

The pattern isn’t new. We’ve had API gateways, service meshes, and load balancers for years. LLM Gateways are the same principle applied to a new problem domain.

If you’re scaling an AI application—multiple models, multiple teams, production traffic—this layer isn’t optional. It’s infrastructure.
