In today’s enterprises, thousands of hours of meetings, presentations, interviews, and conferences are recorded daily. Yet raw audio or video remains useless without intelligent processing that answers critical questions:

- Who said what?

- When did they say it?

- Which speaker owns which discussion point?

- How do we transform chaotic multi-speaker conversations into actionable, structured insights?

These challenges drive modern automated meeting and conference summarization platforms. Leading tools promise speaker-wise action items, role-labelled summaries, participant sentiment analysis, cross-conversation analytics, contribution heatmaps, and precise decision extraction.

For these advanced features to deliver reliable results, two foundations must be solid: consistent speaker voice uniformity (through audio normalization and stable embeddings) and accurate direction detection (via Direction of Arrival, or DOA, estimation, often powered by beamforming). When either is weak, errors propagate into every downstream AI task, from transcription to semantic analysis.

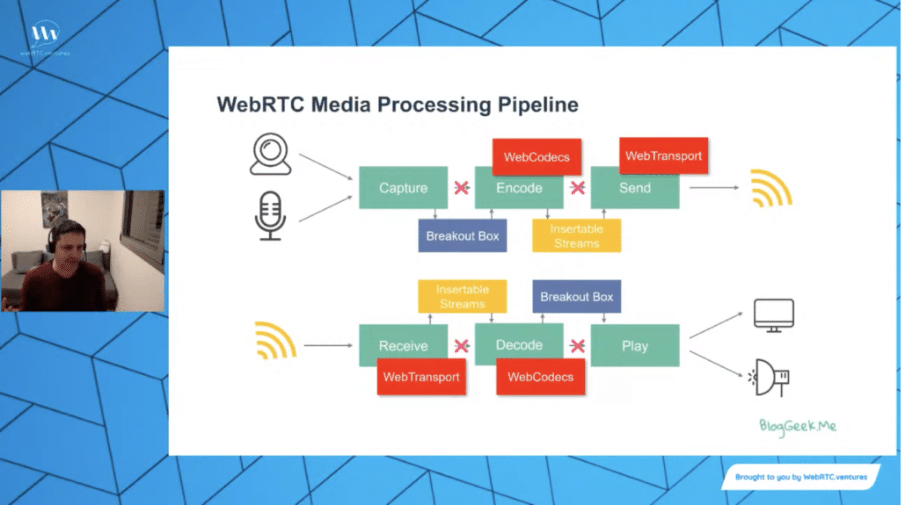

Source: webrtc.ventures

A modern conference room equipped with beamforming microphone arrays for precise audio capture.

The Hidden Challenge: Voice Variability in Real-World Conferences

Real-world conference audio is far from ideal:

- Speakers sit at varying distances from microphones.

- Participants switch devices (laptop to phone to room system).

- Audio codecs and containers differ across browsers and platforms (e.g., Opus in WebM, AAC in MP4).

- Background noise, network jitter, reverberation, and overlapping speech distort signals.

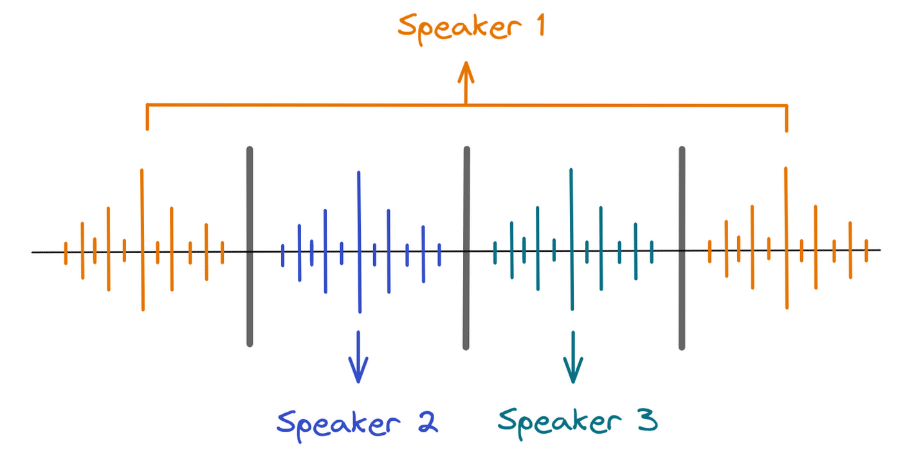

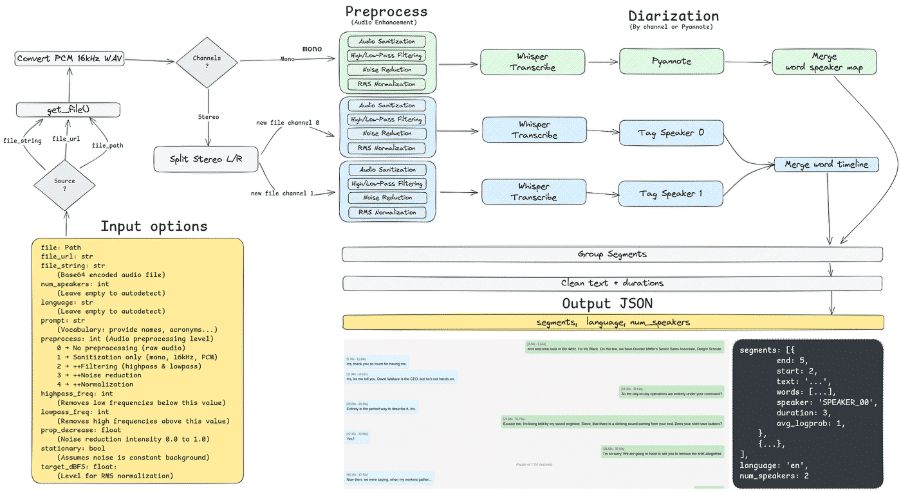

Speaker diarization—the process of partitioning audio into segments labelled by speaker—must maintain identity across these variations. Effective diarization dramatically improves transcription readability and conversational understanding, especially in meetings.

Without robust handling, systems misattribute speech, leading to fragmented or inaccurate outputs.
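To make “segments labelled by speaker” concrete, here is a minimal sketch of what diarization output might look like and how it feeds turn-taking and talk-time metrics. The `Segment` type, the `speaker_turns` and `talk_time` helpers, and the 0.5 s merge gap are hypothetical illustrations, not the API of any particular diarization library.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    start: float   # seconds from the start of the recording
    end: float     # seconds from the start of the recording
    speaker: str   # label assigned by diarization, e.g. "SPEAKER_1"


def speaker_turns(segments, max_gap=0.5):
    """Merge consecutive same-speaker segments into turns.

    Two segments are joined when they share a label and the silence
    between them is at most `max_gap` seconds.
    """
    turns = []
    for seg in sorted(segments, key=lambda s: s.start):
        last = turns[-1] if turns else None
        if last is not None and last.speaker == seg.speaker and seg.start - last.end <= max_gap:
            turns[-1] = Segment(last.start, max(last.end, seg.end), seg.speaker)
        else:
            turns.append(seg)
    return turns


def talk_time(segments):
    """Total seconds attributed to each speaker label."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


if __name__ == "__main__":
    segs = [Segment(0.0, 2.0, "SPEAKER_1"),
            Segment(2.2, 4.0, "SPEAKER_1"),
            Segment(4.1, 6.0, "SPEAKER_2")]
    print(speaker_turns(segs))
    print(talk_time(segs))
```

Because turns and talk time are derived directly from these labels, any segment attributed to the wrong speaker moves both time and content to the wrong person.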

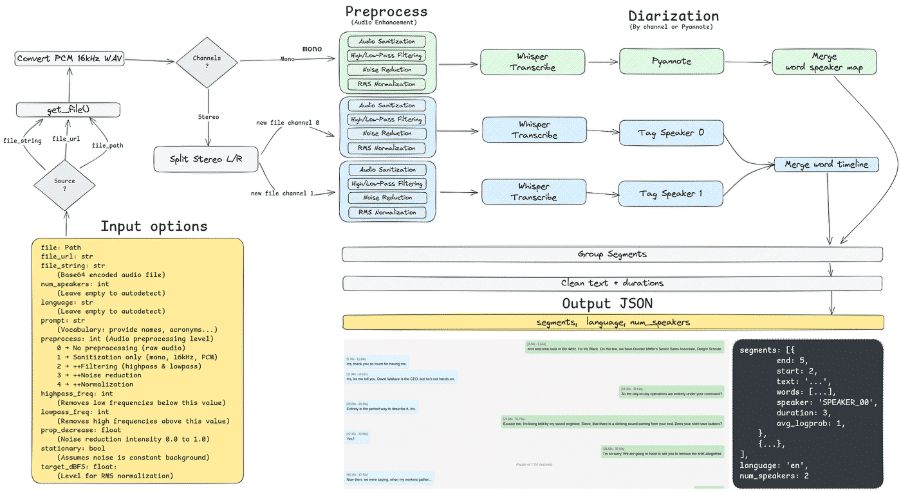

Source:

A typical speaker diarization pipeline, showing segmentation, embedding extraction, and clustering.

Why Voice Uniformity Is Essential for Meeting Intelligence

AI systems rely on voice embeddings—numerical representations (fingerprints) of a speaker’s voice—to track identity. Variations in bitrate, codec, noise, mic distance, or device can cause embeddings to drift, making the system treat the same speaker as multiple people.

This breaks:

- Diarization accuracy

- Speaker attribution

- Action item assignment

- Sentiment analysis per participant

- Talking-time heatmaps and contribution metrics
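The split-identity failure is easy to reproduce with toy numbers. This sketch assigns each segment embedding to the closest known speaker by cosine similarity and opens a new speaker when nothing clears a threshold, a simplified online scheme rather than a full diarization clusterer. The 3-dimensional vectors and the 0.75 threshold are invented for illustration; real embeddings (e.g., from ECAPA-TDNN) have hundreds of dimensions, and thresholds are tuned per model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def assign_speakers(embeddings, threshold=0.75):
    """Greedy online speaker assignment.

    Each embedding joins the most similar existing speaker (by cosine
    similarity to that speaker's running-mean centroid) if the similarity
    reaches `threshold`; otherwise it starts a new speaker.
    """
    centroids, counts, labels = [], [], []
    for emb in embeddings:
        sims = [cosine(emb, c) for c in centroids]
        best = max(range(len(sims)), key=sims.__getitem__, default=None)
        if best is not None and sims[best] >= threshold:
            n = counts[best]
            # Update the running mean so the centroid tracks the speaker.
            centroids[best] = [(c * n + e) / (n + 1) for c, e in zip(centroids[best], emb)]
            counts[best] += 1
            labels.append(best)
        else:
            centroids.append(list(emb))
            counts.append(1)
            labels.append(len(centroids) - 1)
    return labels


if __name__ == "__main__":
    laptop_a = [[1.0, 0.1, 0.0], [0.95, 0.15, 0.05]]  # speaker A on a laptop
    speaker_b = [[0.0, 1.0, 0.2]]                     # speaker B
    phone_a = [[0.5, 0.2, 0.85]]                      # speaker A after a device switch (drifted)
    print(assign_speakers(laptop_a + speaker_b + phone_a))
```

With the drifted fourth vector the function returns `[0, 0, 1, 2]`: the first speaker reappears as a third identity, and every metric in the list above splits accordingly. Replacing that vector with a less drifted one such as `[0.9, 0.2, 0.1]` yields `[0, 0, 1, 0]`.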

Audio normalization, including loudness normalization to a target such as -23 LUFS (the level specified by EBU R 128), keeps perceived volume and spectral characteristics consistent across inputs, which stabilizes embeddings.
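A true -23 LUFS measurement follows ITU-R BS.1770 (K-weighting plus gating), which is more than a short example can cover. As a clearly simplified stand-in, the sketch below scales a mono float signal so its unweighted RMS level hits a target in dBFS, with a peak cap to avoid clipping. The function names and the -23 dBFS default are assumptions for illustration; an RMS dBFS value is not the same quantity as LUFS.

```python
import math


def rms_dbfs(samples):
    """Unweighted RMS level in dBFS for float samples in [-1, 1]."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else -math.inf


def normalize_rms(samples, target_dbfs=-23.0, peak_ceiling=0.99):
    """Scale samples so their RMS level equals target_dbfs.

    Simplified stand-in for loudness normalization: no K-weighting and no
    gating, so the result is NOT a BS.1770 LUFS value. The gain is capped
    so that no sample exceeds peak_ceiling (which can leave very peaky
    signals below the target).
    """
    if not samples:
        return []
    level = rms_dbfs(samples)
    if level == -math.inf:
        return list(samples)  # digital silence: nothing to scale
    gain = 10 ** ((target_dbfs - level) / 20)
    peak = max(abs(s) for s in samples)
    gain = min(gain, peak_ceiling / peak)
    return [s * gain for s in samples]


if __name__ == "__main__":
    sr = 16000
    quiet = [0.01 * math.sin(2 * math.pi * 220 * n / sr) for n in range(sr)]  # distant talker
    loud = [0.5 * math.sin(2 * math.pi * 220 * n / sr) for n in range(sr)]    # close talker
    for name, sig in (("quiet", quiet), ("loud", loud)):
        print(name, round(rms_dbfs(sig), 1), "->", round(rms_dbfs(normalize_rms(sig)), 1))
```

Both the quiet (distant) and loud (close) tones come out at the same RMS level, which is the property embeddings benefit from; a production pipeline would swap in a BS.1770-compliant meter.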

Source: medium.com

Visualization of voice embeddings clustered by speaker, showing how uniformity enables tight, distinct groups.

Why Direction Detection Matters Even More

In conferences, overlapping speech is common. Direction of Arrival (DOA) estimation, often implemented via beamforming microphone arrays, pinpoints the spatial origin of sound. This helps separate overlapping voices, track active speakers, and associate audio streams with physical positions.

Beamforming focuses on sound from a specific direction while suppressing noise and echoes, significantly improving diarization in noisy or reverberant rooms. In multi-microphone setups, DOA is commonly derived from the Time Difference of Arrival (TDOA) between microphone pairs, and the TDOA itself is often estimated with Generalized Cross-Correlation with Phase Transform (GCC-PHAT).
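The TDOA route can be sketched end to end: estimate the inter-microphone delay with GCC-PHAT, then convert it to an angle with the far-field relation θ = arcsin(c·τ/d), where c is the speed of sound (about 343 m/s), τ the delay in seconds, and d the microphone spacing. To stay dependency-free this uses a naive O(n²) DFT and a circular test delay; the function names are hypothetical, and a real implementation would use an FFT library, windowing, zero-padding, and sub-sample peak interpolation.

```python
import cmath
import math
import random

SPEED_OF_SOUND = 343.0  # m/s in room-temperature air


def dft(x):
    """Naive O(n^2) discrete Fourier transform (fine for short demo frames)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gcc_phat_delay(sig, ref, eps=1e-12):
    """Estimate how many samples `sig` lags `ref` using GCC-PHAT.

    The cross-spectrum is divided by its magnitude (the PHAT weighting), so
    only phase, and therefore only timing, information shapes the peak.
    """
    n = len(sig)
    cross = [s * r.conjugate() for s, r in zip(dft(sig), dft(ref))]
    cross = [c / (abs(c) + eps) for c in cross]
    corr = [v.real for v in idft(cross)]
    peak = max(range(n), key=corr.__getitem__)
    return peak if peak <= n // 2 else peak - n  # map circular index to a signed lag


def doa_degrees(lag_samples, sample_rate, mic_spacing_m, c=SPEED_OF_SOUND):
    """Far-field arrival angle (0 = broadside) for a two-microphone pair."""
    ratio = c * (lag_samples / sample_rate) / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp: noise can push |ratio| past 1
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    random.seed(0)
    ref = [random.gauss(0.0, 1.0) for _ in range(64)]  # mic 1: white-noise stand-in for speech
    sig = ref[-3:] + ref[:-3]                          # mic 2: same sound, 3 samples later (circular)
    lag = gcc_phat_delay(sig, ref)
    print(lag, round(doa_degrees(lag, 16000, 0.10), 1))
```

With 10 cm spacing at 16 kHz the largest physically possible lag is about 0.10 / 343 × 16000 ≈ 4.7 samples, so integer lags give only a handful of distinguishable angles; this is why practical systems interpolate around the correlation peak or use larger arrays.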

Without DOA cues, multi-speaker rooms become prone to these failures:

- Speakers merge into one identity

- Wrong attributions occur

- Overlapping speech becomes garbled

- Summaries lose context and accuracy

Source: https://emeet.com/blogs/content/doa-direction-of-arrival-for-conference-speakerphone

Illustration of time difference of arrival (TDOA) in a microphone array for DOA estimation.

Browser & Codec Fragmentation: The Silent Enemy

Browser and platform differences create significant hurdles for audio consistency. WebRTC makes Opus a mandatory-to-implement audio codec (alongside G.711), so live calls across major browsers (Chrome, Firefox, Edge, and modern Safari) generally negotiate Opus, but challenges persist in mixed or recorded scenarios:

- Chrome and Firefox typically produce Opus audio, commonly in WebM containers, when recording in the browser.

- Safari has historically recorded AAC in MP4 containers, even though it also negotiates Opus for WebRTC calls.

- Legacy systems or non-WebRTC recordings may fall back to WAV, PCM, or other formats.

When participants switch devices mid-meeting (e.g., from desktop Chrome to mobile Safari) or when recordings are processed post-session, codec mismatches cause spectral shifts and embedding drift. This leads to the AI confusing the same speaker across segments—destroying continuity in long meetings.

Real-world example: A speaker joins via Chrome (Opus-encoded) but switches to iPhone (potentially AAC-encoded stream). Without aggressive normalization, voice embeddings diverge, resulting in split identities like “Speaker 1” and “Speaker 3” for the same person.

Standardizing all inputs to a uniform format, for example decoding every stream and resampling it to a common sample rate and channel layout (or re-encoding everything with Opus-like characteristics), is critical to preventing this fragmentation.
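A minimal sketch of that standardization step, assuming each stream has already been decoded to interleaved float PCM (decoding WebM/Opus or MP4/AAC needs a tool such as FFmpeg and is out of scope here). It downmixes to mono and resamples every input to one common rate. The 16 kHz target is an assumption (many speaker-embedding models are trained on 16 kHz audio), the helper names are hypothetical, and the linear-interpolation resampler is deliberately naive: it has no anti-aliasing filter, so production code should use a proper band-limited resampler.

```python
def downmix_to_mono(interleaved, channels):
    """Average interleaved multi-channel float samples into one channel."""
    if channels == 1:
        return list(interleaved)
    frames = len(interleaved) // channels
    return [sum(interleaved[i * channels:(i + 1) * channels]) / channels for i in range(frames)]


def resample_linear(samples, sr_in, sr_out):
    """Naive linear-interpolation resampler (no anti-aliasing filter)."""
    if sr_in == sr_out or not samples:
        return list(samples)
    n_out = int(len(samples) * sr_out / sr_in)
    out = []
    for i in range(n_out):
        pos = i * sr_in / sr_out          # position of output sample i in input samples
        j = int(pos)
        frac = pos - j
        nxt = samples[j + 1] if j + 1 < len(samples) else samples[j]
        out.append(samples[j] * (1 - frac) + nxt * frac)
    return out


def to_canonical(interleaved, channels, sr_in, sr_out=16000):
    """Hypothetical helper: bring any decoded stream to mono at sr_out."""
    return resample_linear(downmix_to_mono(interleaved, channels), sr_in, sr_out)


if __name__ == "__main__":
    chrome_48k_stereo = [0.2, 0.1] * 480   # 10 ms of 48 kHz stereo
    safari_44k1_mono = [0.1] * 441         # 10 ms of 44.1 kHz mono
    print(len(to_canonical(chrome_48k_stereo, 2, 48000)),
          len(to_canonical(safari_44k1_mono, 1, 44100)))
```

Whatever a participant's browser produced, every segment then reaches the embedding model with the same sample rate and channel layout.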

Why WebRTC + Opus Is Emerging as the Gold Standard

WebRTC, combined with the Opus codec, provides the most reliable foundation for real-time and near-real-time conference intelligence. Opus is universally supported in WebRTC across browsers and excels in speech scenarios due to:

- Adaptive bitrate (6–510 kbps) that adjusts to network conditions, degrading gracefully instead of dropping out.

- Low latency optimization, ideal for interactive conferences.

- Superior packet loss concealment and forward error correction (FEC).

- Graceful adaptation to variable bandwidth; noise suppression, often credited to the codec, is actually applied by WebRTC's audio processing before encoding.

- High fidelity at low bitrates: for speech, Opus typically sounds clearer than older codecs such as G.711 or AAC at comparable or lower bitrates.
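Several of these Opus properties are negotiated per session in SDP. The sketch below builds an `a=fmtp` line using parameter names defined in the Opus RTP payload format (RFC 7587): `useinbandfec`, `usedtx`, `stereo`, and `maxaveragebitrate`, the last validated against the same 6–510 kbps range. The helper itself is hypothetical, and payload type 111 is only an assumed default (it is a dynamic value that Chrome commonly uses); browsers normally generate this line themselves, so a server would use something like this mainly to inspect or adjust SDP.

```python
def opus_fmtp(payload_type=111, *, inband_fec=True, dtx=False, stereo=False,
              max_average_bitrate=None):
    """Build an SDP a=fmtp line for Opus using RFC 7587 parameter names."""
    params = {
        "useinbandfec": int(inband_fec),  # ask the sender to include in-band FEC
        "usedtx": int(dtx),               # discontinuous transmission during silence
        "stereo": int(stereo),            # 0 = receiver prefers mono (speech)
    }
    if max_average_bitrate is not None:
        # Opus operates between 6 kbps and 510 kbps.
        if not 6000 <= max_average_bitrate <= 510000:
            raise ValueError("maxaveragebitrate must be in 6000..510000 bit/s")
        params["maxaveragebitrate"] = max_average_bitrate
    body = ";".join(f"{key}={value}" for key, value in params.items())
    return f"a=fmtp:{payload_type} {body}"


if __name__ == "__main__":
    print(opus_fmtp(max_average_bitrate=32000))
```

For example, `opus_fmtp(max_average_bitrate=32000)` returns `a=fmtp:111 useinbandfec=1;usedtx=0;stereo=0;maxaveragebitrate=32000`, requesting mono speech with in-band FEC at up to 32 kbps.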

Because every participant’s audio flows through the same WebRTC pipeline (echo cancellation, noise suppression, automatic gain control, and jitter buffering), embeddings start from a far more uniform baseline.

Source: opus-codec.org

Comparison chart showing Opus outperforming other codecs in quality across bitrates.

Challenges such as NAT and firewall traversal remain (typically addressed with STUN and TURN servers), but for multi-speaker analytics that demand consistency, WebRTC + Opus remains the strongest foundation available.

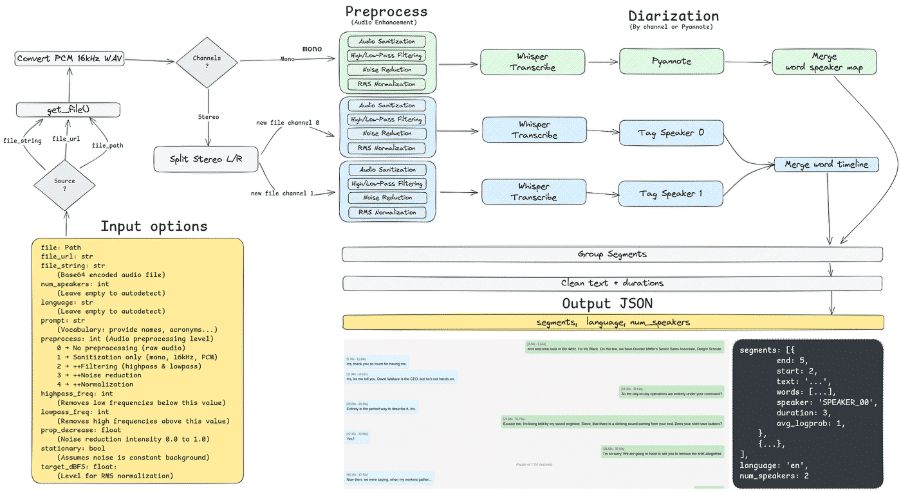

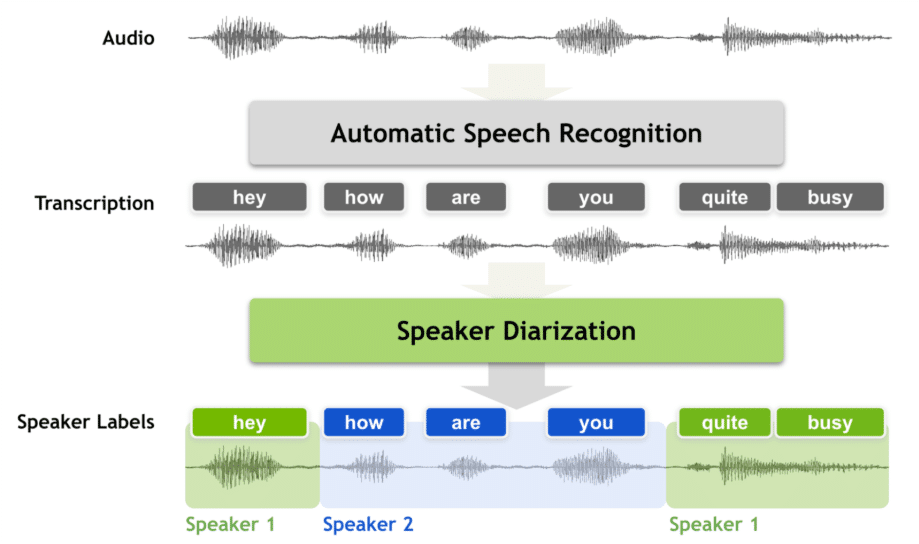

The Real Pipeline for Enterprise-Grade Conference Intelligence

A robust, enterprise-ready system integrates multiple stages for accuracy:

- Voice Activity Detection (VAD): Identifies speech segments vs. silence/noise.

- Audio Normalization & Preprocessing: Resamples, normalizes loudness, and suppresses noise/echo.

- Beamforming & DOA Estimation: Uses microphone arrays to spatially separate sources (e.g., MVDR beamforming or neural beamformers).

- Speaker Embedding Extraction: Generates fixed-length vectors (e.g., via ECAPA-TDNN models).

- Clustering & Diarization: Groups embeddings (e.g., with agglomerative hierarchical clustering) and refines the result with DOA cues.

- Overlapping Speech Handling: Resolves overlaps using spatial or neural separation.

- ASR Transcription: Converts cleaned, diarized audio to text.

- LLM-Driven Analysis: Infers roles, extracts actions, summarizes per speaker.
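The first stage can be illustrated with the simplest possible detector: an energy-based VAD that flags fixed-length frames as speech when their RMS level exceeds a threshold, then merges nearby speech frames into segments. The 30 ms frames, the -40 dBFS threshold, and the function name are assumptions; production systems usually rely on trained detectors (such as the VAD built into WebRTC or neural VADs), which hold up far better under noise.

```python
import math


def energy_vad(samples, sample_rate, frame_ms=30, threshold_dbfs=-40.0, min_gap_frames=2):
    """Return (start_s, end_s) speech segments from float samples in [-1, 1].

    Frames whose RMS level exceeds threshold_dbfs count as speech; speech
    runs separated by fewer than min_gap_frames silent frames are merged.
    A trailing partial frame is ignored.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    flags = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        level = 20 * math.log10(rms) if rms > 0 else -math.inf
        flags.append(level > threshold_dbfs)

    segments = []  # entries: (start_s, end_s, index of last speech frame)
    for i, is_speech in enumerate(flags):
        if not is_speech:
            continue
        t0, t1 = i * frame_ms / 1000, (i + 1) * frame_ms / 1000
        if segments and i - segments[-1][2] <= min_gap_frames:
            segments[-1] = (segments[-1][0], t1, i)  # extend the current segment
        else:
            segments.append((t0, t1, i))
    return [(s, e) for s, e, _ in segments]


if __name__ == "__main__":
    sr = 16000
    audio = [0.0] * sr + [0.1] * sr + [0.0] * sr  # 1 s silence, 1 s "speech", 1 s silence
    print(energy_vad(audio, sr))
```

The resulting `(start, end)` pairs in seconds are what the later embedding and clustering stages consume.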

Integrating DOA cues can substantially reduce the diarization error rate (DER) in meeting scenarios, especially when microphone arrays are available.
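One way to “refine with DOA cues” is to fold spatial information directly into the clustering distance. The sketch below runs average-linkage agglomerative clustering on a fused distance: a weighted mix of cosine distance between embeddings and the angular difference between per-segment DOA estimates. The 0.5 weight, the 0.25 stopping threshold, and the assumption that people stay in their seats are all illustrative; real systems tune such values on held-out meetings, and a participant who walks around weakens the spatial cue.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; ranges from 0 (same direction) to 2 (opposite)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def fused_distance(seg_a, seg_b, doa_weight=0.5):
    """Blend voice dissimilarity and spatial separation, each scaled to [0, 1]."""
    (emb_a, doa_a), (emb_b, doa_b) = seg_a, seg_b
    diff = abs(doa_a - doa_b) % 360.0
    spatial = min(diff, 360.0 - diff) / 180.0  # 0 = same bearing, 1 = opposite
    voice = cosine_distance(emb_a, emb_b) / 2.0
    return (1.0 - doa_weight) * voice + doa_weight * spatial


def cluster_segments(segments, threshold=0.25, doa_weight=0.5):
    """Average-linkage agglomerative clustering on the fused distance.

    segments: list of (embedding, doa_degrees) pairs, one per speech segment.
    Merging stops once the closest pair of clusters is farther than threshold.
    Returns one integer speaker label per segment.
    """
    clusters = [[i] for i in range(len(segments))]

    def linkage(c1, c2):
        dists = [fused_distance(segments[i], segments[j], doa_weight) for i in c1 for j in c2]
        return sum(dists) / len(dists)

    while len(clusters) > 1:
        pairs = [(a, b) for a in range(len(clusters)) for b in range(a + 1, len(clusters))]
        a, b = min(pairs, key=lambda p: linkage(clusters[p[0]], clusters[p[1]]))
        if linkage(clusters[a], clusters[b]) > threshold:
            break
        merged = clusters.pop(b)  # b > a, so index a stays valid
        clusters[a].extend(merged)

    labels = [0] * len(segments)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


if __name__ == "__main__":
    # Two similar-sounding people seated on opposite sides of the table.
    segs = [([1.0, 0.2], 30.0), ([0.98, 0.25], 32.0),
            ([0.97, 0.3], 150.0), ([1.0, 0.22], 148.0)]
    print("voice only:", cluster_segments(segs, doa_weight=0.0))
    print("voice + DOA:", cluster_segments(segs, doa_weight=0.5))
```

With `doa_weight=0.0` the two similar-sounding speakers collapse into one label, `[0, 0, 0, 0]`; with the spatial term enabled they separate into `[0, 0, 1, 1]`.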

Source: docs.openvino.ai

Advanced speaker diarization pipeline flowchart incorporating neural embeddings and spatial processing.

Building Scalable Solutions: Partner with Experts

Delivering these capabilities at enterprise scale requires deep expertise in audio processing, machine learning, and real-time systems. Companies like 47Billion specialize in Data Science, LLM integration, and Agentic AI solutions, helping organizations build custom, robust conference intelligence platforms that handle multi-speaker complexity with high accuracy and reliability.

Conclusion

Multi-speaker conference intelligence transcends simple transcription. True enterprise value emerges when systems master audio normalization, voice uniformity, direction detection, and diarization—unlocking precise speaker-wise insights, reliable action items, and deep behavioral analytics.

Without these pillars, meeting AI remains shallow and unreliable. With them—leveraging standards like WebRTC/Opus, beamforming, and advanced pipelines—we unlock a new era of structured, trustworthy conference intelligence.

Ready to transform your organization’s meetings? Partner with experts like 47Billion for scalable Data Science, LLM, and Agentic AI solutions.